Shadows of Evidence

We walk around the world with a bewildering network of opinions, beliefs and judgments, some of them ephemeral (I think that I won’t need an umbrella today) and some vital (I trust that person).

Why is the sky blue? Why do you believe you are mortal? Why is four twice two? How do you know that George Washington actually existed?

If we have an opinion about such a question, then when we are challenged, we offer what we think counts as evidence. We hope, of course, that our evidence persuades our challenger (i.e., we hope that our rhetoric is up to the task), but, more importantly, we hope that our evidence really justifies our opinion.

It is striking, though, how different branches of knowledge — the humanities, the sciences, mathematics — justify their findings so very differently; they have, one might say, quite incommensurate rules of evidence. Often a shift of emphasis, or framing, of one of these disciplines goes along with, or derives from, a change of these rules, or of the repertoire of sources of evidence, for justifying claims and findings in that field. Law has, of course, its own precise rules explicitly formulated.

1 As is perfectly reasonable, Darwin reserves the word “fact” for those pieces of data or opinion that have been, in some sense, vetted, and are not currently in dispute. The word “evidence” in “On the Origin of Species” can refer to something more preliminary that is yet to be tested and deemed admissible or not. Sometimes, if evidence is firmer than that, Darwin will supply it with an adjective such as “clear” or “plainest”; it may come as a negative, such as “there isn’t a shadow of evidence.”

Even the way the word “evidence” is used can already tell us much about the profile of an intellectual discipline. To take a simple example, consider Charles Darwin’s language in “On the Origin of Species” (specifically, his use of the words “fact” and “evidence”) as offering us clues about the types of argumentation that Darwin counts in support, or in critique, of his emerging theory of evolution.1 Sometimes Darwin provides us with a sotto voce commentary on what shouldn’t count (or should only marginally count) as evidence, such as when he writes, “But we have better evidence on this subject than mere theoretical calculations.”

Darwin spends much time offering his assessment of what one can expect — or not expect — to glean from the fossil record. He gives quick characterizations of types of evidence — “historical evidence” he calls “indirect” (as, indeed, it is in comparison with the evidence one gets by having an actual bone in one’s actual hands). These types of judgments frame the project of evolution.

The subsequent changes in Darwin’s initial repertoire, such as evidence obtained by formulating various mathematical models, or the formidable technology of gene sequencing, and so on, mark changes in the types of argument evolutionary biologists regard as constituting a genuine result in the field — in effect, changes in what they regard evolutionary biology to be.

If we accept that the shape and mood of a field of inquiry are largely, or even just somewhat, determined by the specific kinds of evidence needed to have consensually agreed-upon findings or results in that field, it becomes important to study the perhaps peculiar nature of evidence in different domains to appreciate how these distinct domains fit into the greater constellation of intellectual effort.

Mathematics — a realm in which one might think the issue of evidence to be fairly straightforward (you prove a theorem or you don’t) — will, as we shall see, turn out to be not at all clear, and it has its own history of the shaping of types of evidence.

In the fall semester of 2012 I had the pleasure of co-running a seminar course, “The Nature of Evidence,” in the Harvard Law School with Professor Noah Feldman. It was structured as an extended conversation between different practitioners and our students. A number of experts contributed to lectures and discussions. We found it very useful to learn in some specificity from people in different fields, via concrete examples graspable by people outside the field, what evidence consists of in physics, economics, biology, art history, history of science, mathematics and law.

Once one looks with a microscope at the structure of evidence in any of these fields, even though this structure is quite specific to the field, and a moving target, the project of understanding it in a larger context is very much worth doing. While studying concrete examples in different fields, we aimed for a comprehensive view and not merely a fragmented “evidence-in-X, evidence-in-Y, etc.,” with no matrix to tie these bits together.

The following has been loosely adapted from a class handout I put together as preparation for our students — few of them mathematicians — for my own presentation specifically regarding evidence in mathematics.

Evidence in Mathematics

The Plan

People in mathematics, or in the sciences related to mathematics, know well that the issue of evidence, as it pertains to how one studies math, or engages in its practice, or does research in it, is not a simple matter. It is not “a result is proved or it isn’t and that’s the end of it.” The very complexity of different types and different moods of evidence, all intermingling in a subject which is as exact as mathematics undeniably is, has intrigued me for years.

One of my goals is to get a slightly better sense of the way various “faces of evidence” play a role in my mathematical work. I also believe that it is just a good idea to become aware of the various distinct profiles of evidence in a number of disciplines, and to understand how these profiles change in time, how they shape and delimit and define those disciplines, and how they influence the interaction between disciplines. Such reflection may enable any of us to appreciate (and view) our own work in a deeper way.

2 For one of the many applications of mathematics to provide evidence in law, see Poincaré et al. on the Dreyfus case.

Although we’ll review, below, an annotated vocabulary list of terms related to evidence, I won’t give an all-encompassing definition of the phrase “mathematical evidence” itself. I’ll only mention here that I want to construe it as meaning something much more extensive than numerical or statistical evidence, or mathematical proof, or even evidence related to the enterprise of producing appropriate mathematical models meant for application to other branches of knowledge.2

We will get into the issues of conjecture, proof, rigor and the more easily recognized ways of accumulating and labeling evidence, but to convince you that there are yet other types of evidence playing their roles in pure mathematics, here are three simple examples.

“Visual” Evidence

Consider the rise of the fractals of Benoit Mandelbrot.

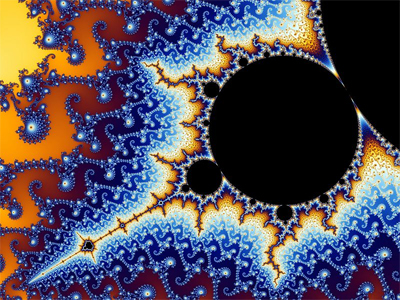

Up to the end of World War I, the theory that was the progenitor of “fractals” was called Fatou-Julia theory. That theory studied the structure of certain regions in the plane that are very important for issues related to dynamics. Surely, Fatou or Julia would not have been able to make too exact a numerical plot of these regions. And unless you plotted them very accurately, they would show up as blobs in the plane with nothing particularly interesting about their perimeters — something like this:

Partly due to the ravages of World War I, and partly because of the general consensus that the problems in this field were essentially understood, there was a lull of half a century in the study of such planar regions, now often called Julia sets.

But in the early 1980s Mandelbrot made (as he described it) “a respectful examination of mounds of computer-generated graphics.” His pictures of such Julia sets, and of related planar regions now called Mandelbrot sets, were significantly more accurate and tended to look like the figure below (which is a more modern version of the ones Mandelbrot produced).

From such pictures alone it became evident that there is an immense amount of structure to the regions drawn and to their perimeters. This almost immediately re-energized and broadened the field of research, making it clear that very little of the basic structure inherent in these sets had been perceived, let alone understood. It also suggested new applications. Mandelbrot proclaimed, with some justification, that “Fatou-Julia theory ‘officially’ came back to life” on the day that he displayed his illustrations in a seminar in Paris.

Computers nowadays, as we all know, can accumulate and manipulate massive data sets. But they also play the role of microscope for pure mathematics, allowing for a type of extreme visual acuity that is, itself, a powerful kind of evidence.
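To make the “microscope” concrete: the Mandelbrot set consists of the complex numbers c for which iterating z ↦ z² + c, starting from z = 0, stays bounded, and once |z| exceeds 2 the orbit is certain to escape to infinity. The sketch below is the standard escape-time test, not a reconstruction of Mandelbrot’s own programs; the function names, the viewing window, and the cap of 100 iterations are illustrative assumptions. A point that has not escaped by the cap is only presumed to lie in the set, which is exactly the kind of evidence, short of proof, that such pictures supply.

```python
def escape_time(c, max_iter=100):
    """Iterate z -> z*z + c from z = 0; return the step at which |z| first exceeds 2.

    Returns None if no escape is seen within max_iter steps, in which case c is
    only presumed (not proved) to lie in the Mandelbrot set.
    """
    z = 0j
    for n in range(max_iter):
        z = z * z + c
        if abs(z) > 2:  # beyond radius 2 the orbit is certain to diverge
            return n
    return None


def ascii_mandelbrot(width=64, height=24, max_iter=100):
    """Render a coarse picture of the set over the window [-2.1, 0.6] x [-1.2, 1.2]."""
    rows = []
    for row in range(height):
        y = 1.2 - 2.4 * row / (height - 1)
        line = []
        for col in range(width):
            x = -2.1 + 2.7 * col / (width - 1)
            line.append("#" if escape_time(complex(x, y), max_iter) is None else ".")
        rows.append("".join(line))
    return "\n".join(rows)


if __name__ == "__main__":
    print(ascii_mandelbrot())
```

Raising width, height, and max_iter sharpens the boundary, at the cost of more computation; that trade-off is the “extreme visual acuity” the paragraph above describes.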

The Evidence of Coincidence

In the late 1970s, the mathematician John McKay made a simple observation. He remarked that

196,884 = 1 + 196,883

3 196,884 is the first interesting coefficient of a basic function in that branch of mathematics: the elliptic modular function.

4 196,883 is the smallest dimension of a Euclidean space that has the largest sporadic simple group (the monster group) as a subgroup of its symmetries.

5 McKay gave a convincing interpretation of the 1 in the formula as well.

What is peculiar about this formula is that the left-hand side of the equation, i.e., the number 196,884, is well known to most practitioners of a certain branch of mathematics (complex analysis, and the theory of modular forms),3 while 196,883, which appears on the right, is well known to most practitioners of what was in the 1970s quite a different branch of mathematics (the theory of finite simple groups).4 McKay took this “coincidence” — the closeness of those two numbers5 — as evidence that there had to be a very close relationship between these two disparate branches of pure mathematics, and he was right! Sheer coincidences in math are often not merely sheer; they’re often clues — evidence of something missing, yet to be discovered.
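The left-hand side of McKay’s equation can be recomputed in a few lines. A minimal sketch, assuming the standard formula j = E4³/Δ, where E4 = 1 + 240·Σ σ3(n)qⁿ is the weight-4 Eisenstein series (σ3(n) being the sum of the cubes of the divisors of n) and Δ = q·∏(1 − qⁿ)²⁴. The code works with truncated integer power series; the helper names are illustrative. It shows 196,884 as the coefficient of q in j = q⁻¹ + 744 + 196,884q + ⋯.

```python
def sigma3(n):
    """Sum of the cubes of the divisors of n."""
    return sum(d ** 3 for d in range(1, n + 1) if n % d == 0)


def mul(a, b, n):
    """Product of two power series (coefficient lists), truncated to n terms."""
    c = [0] * n
    for i in range(min(n, len(a))):
        for k in range(min(n - i, len(b))):
            c[i + k] += a[i] * b[k]
    return c


def inverse(a, n):
    """Reciprocal of a power series whose constant term is 1, truncated to n terms."""
    inv = [1] + [0] * (n - 1)
    for k in range(1, n):
        inv[k] = -sum(a[i] * inv[k - i] for i in range(1, min(k, len(a) - 1) + 1))
    return inv


def j_coefficients(n):
    """First n coefficients of q * j(q), i.e. the coefficients of q^-1, q^0, q^1, ... in j."""
    e4 = [1] + [240 * sigma3(k) for k in range(1, n)]
    e4_cubed = mul(mul(e4, e4, n), e4, n)
    # eta24 holds prod_{m >= 1} (1 - q^m)^24, truncated to n terms
    eta24 = [1] + [0] * (n - 1)
    for m in range(1, n):
        for _ in range(24):
            for k in range(n - 1, m - 1, -1):  # multiply in place by (1 - q^m)
                eta24[k] -= eta24[k - m]
    return mul(e4_cubed, inverse(eta24, n), n)


coeffs = j_coefficients(4)
print(coeffs)              # [1, 744, 196884, 21493760]
print(coeffs[2] - 196883)  # 1
```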

Evidence Coming As a New Clue, Midstream

The Monty Hall Probability Puzzle is a problem extracted from a famous game from an old TV show (“Let’s Make a Deal”) in which — at least as I’ll recount it — if you win, you win a goat. The relevant issue, for us, is given by the following general ground rules of the game: you are faced with three closed doors (in a row, onstage) and are told that the goat is behind one of those doors, there being nothing behind the other two.

All you have to do is open the right door, i.e., the door with the goat, and you win. But you are asked, first, to indicate what door you intend to open, without actually opening it. At this point, Monty Hall, the generous host of the show, will offer to help you make your final choice by opening one of the other two doors (behind which there is nothing), and then he will say: “You are free to revise your original choice.”

What should you do (assuming, of course, that you actually want that goat)? Are your chances of winning independent of whether or not you change your preliminary choice? Or is there a clear preference for one of the two strategies: sticking to your guns, or switching?

Here is an exercise: Come up with an answer to the question above and give an argument defending it. Note that this problem is very extensively covered and discussed on the Internet, but if you haven’t encountered it before, there is some benefit to thinking it through independently. Only then is it fun, if you want, to see what Google has to say about it.
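For readers who have already settled on an answer, here is one way to check it: a minimal sketch that enumerates every equally likely case exactly, using Python’s fractions module, rather than simulating. It assumes the rules as stated above, plus one detail the story leaves implicit: when both unchosen doors are empty, Monty picks between them at random. (That choice does not change the totals.) The function name is illustrative.

```python
from fractions import Fraction


def monty_hall_odds():
    """Exact winning probabilities for 'stick' and 'switch' in the three-door game."""
    doors = range(3)
    stick = switch = Fraction(0)
    for goat in doors:          # the goat is equally likely to be behind any door
        for pick in doors:      # the contestant's first choice, also uniform
            # Monty opens an empty door that the contestant did not pick
            openable = [d for d in doors if d not in (pick, goat)]
            for opened in openable:
                weight = Fraction(1, 9) / len(openable)
                other = next(d for d in doors if d not in (pick, opened))
                if pick == goat:
                    stick += weight
                if other == goat:
                    switch += weight
    return stick, switch


print(monty_hall_odds())  # (Fraction(1, 3), Fraction(2, 3))
```

Switching wins exactly when the first pick was wrong, which happens two times in three; the door Monty opens is the clue, arriving midstream, that makes the remaining door worth more than the original choice.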

The point for us is that evidence in mathematical reasoning sometimes arises in slant ways. The structure of this simple game reminds me of a certain computational strategy that makes judicious choices from an inventory of different algorithms, and switches from one algorithm to another depending on new clues that come up in mid-computation.

But these are only three of the many forms in which evidence presents itself in pure mathematical research. Of course, the physical sciences have been providing evidence for mathematical truths, from the earliest astronomical observations to the most current work in string theory.

A Short Annotated Vocabulary List

Self-Evidence

In one of our founding documents, the assertion “all men are created equal” is proclaimed to be a self-evident truth, but 87 years later it was demoted to a mere proposition, i.e., something that needs proof.

A tricky notion, self-evidence: I find that I blithely claim self-evident status for lots of my own private opinions, my interpretations of fact, and a motley assortment of other sentiments. It goes without saying that I do all this without any justification; I have no need of it!

The term “self-evident” itself is not often found in the mathematical literature. Nevertheless, the various starting points of mathematical work such as the technology of basic logic (which incorporates the syllogism, the law of the excluded middle, and so on) were taken at one time to be self-evident — or at least were taken as requiring only informal, conversational justification, if any, until in later mathematical developments it was discovered that these “starting points” needed to be better understood and had to be quite substantially constrained or reconfigured.

The (so-called) crisis in the foundations of mathematics forces mathematicians to take a far less relaxed view of the basic technology of logic — we’ll see a bit of this below.

The starting presuppositions6 in Euclid’s “Elements of Geometry” are called “common notions,” not “self-evident axioms.” We’ll examine this as well, including the story of the parallel postulate.

6 The question of which direction you pursue from the “starting point” is a theme of Book 6 of Plato’s dialogue “The Republic,” where, as Socrates would have it, the mathematician builds constructions on unexamined assumptions, while for the dialectician (i.e., the philosopher) assumptions are not “absolute beginnings but are literally hypotheses, i.e., underpinnings, footings, and springboards so to speak, to enable [the conversation] to rise to that which requires no assumption and is the starting-point of all.”

One big difference between the two labels “self-evident axioms” and “common notions” is, of course, that the “self” of the first label emphasizes a certain “self-sufficiency”: you alone are the judge of how evident it is (to you). The “common” of “common notions” alludes to either a pre-established consensus or some kind of tacit accord, possibly of the universally subjective or “allgemeine Stimme” (universal voice) status that Kant tried to describe in “The Critique of Judgment.”7

7 One can gauge how important implicit consensus is, as an ever-present concern and armature in mathematical argument, just by totting up the number of times phrases like “well known” or the passive “it is clear that” are used in mathematical treatises.

8 One natural way, for people who know some calculus (or, more specifically, are happy with the concept “continuous”), is to cast the problem on the Cartesian plane. Even with that, special care must be taken in defining what it means for points to be inside or outside the closed loop. If you define these notions “inside” and “outside” in a certain way, the “claim” will be true by definition; if in some other way, it will require proof.

9 I’ve formulated it slightly more generally than as it occurs in the classical literature (where it is called the intermediate value theorem).

Here is a little thought exercise in mathematical framing:

Construction: On a piece of paper (meant to stand for the Euclidean plane) draw, with one stroke of the pen, a circle, or any closed loop. Now try to connect a point inside the drawn circle to a point outside the circle by some continuous curve.

Claim: Your continuous curve will, at some point, intersect the circle.

Now the exercise is to think about the possible status of this simple construction and the claim following it. First, do you think that “it” — i.e., the assertion — is true? The quotation marks around “it” are there simply to note that the claim itself and the construction are hardly well defined mathematically. To pin them down, we must put them into some kind of formal context. In a word, we have to “model” the construction and claim in some way.8 Second, do you think that the claim should be framed in a way that makes it self-evident, or as requiring proof? I might mention here that the substance and status of this very claim9 was the topic of heated debate about the foundations of mathematics at the beginning of the 20th century (as we’ll discuss later).
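One way to “model” the claim, in the special case where the loop is the unit circle in the Cartesian plane: if a continuous path γ on [0, 1] starts inside and ends outside, then f(t) = |γ(t)| - 1 is continuous, negative at t = 0 and positive at t = 1, so the intermediate value theorem supplies a crossing, and bisection locates one numerically. This is a minimal sketch under that assumption; the function name and the two sample paths are illustrative, and a numerical search of this kind is evidence for the claim, not a proof of it.

```python
import math


def crossing_parameter(path, tol=1e-12):
    """Find t in [0, 1] where a continuous path meets the unit circle.

    `path` maps t to a point (x, y); it must start strictly inside the circle
    and end strictly outside it.
    """
    def f(t):
        x, y = path(t)
        return math.hypot(x, y) - 1.0  # negative inside, zero on, positive outside

    lo, hi = 0.0, 1.0
    if not (f(lo) < 0 < f(hi)):
        raise ValueError("path must start inside and end outside the unit circle")
    # Invariant: f(lo) < 0 <= f(hi), so a crossing lies in [lo, hi].
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def segment(t):
    return (2 * t, 0.0)  # straight out along the x-axis


def spiral(t):
    return (3 * t * math.cos(5 * t), 3 * t * math.sin(5 * t))  # distance from origin is 3t


print(crossing_parameter(segment))  # close to 0.5
print(crossing_parameter(spiral))   # close to 1/3
```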

Well Defined

This notion gets to an essential difference between mathematics and other realms of thought. Among all the other things that it is, mathematics is the art of the unambiguous.

It is almost uncanny how mathematics has the capability of achieving such a high level of exactitude in its definitions and assertions. Thanks to this, we can be “originalists” with regard to fifth-century B.C. mathematical texts at a level that would be ludicrous to expect when puzzling over the U.S. Constitution.

Often much is made in mathematical logical circles of how crucial it is for theories to be consistent. This is true enough, but it is a piece — admittedly, the essential piece — of a larger day-to-day issue that arises when thinking about mathematics; namely, the importance of being sensitive to ambiguities of all sorts (and not only to the crisis that would occur if the same proposition were to be provably both true and false).

Here is an easy mathematical example that might give you a sense of how finely tuned mathematicians are to the question of ambiguity.

11 That is, at least two of its sides are equal.

12 Or, if you prefer, in the jargon of plane geometry, they may be said to achieve “SAS.”

Consider a pair of scalene triangles10 ABC and DEF that are congruent. Compare that situation with a pair of triangles GHI and JKL that are congruent, where one of the triangles (hence the other as well) is isosceles.11

Now there is a structural difference between these two situations; modern algebra strongly presses home to its practitioners how important it often is to keep such differences in mind. Namely, in the first, scalene, case the congruence itself between ABC and DEF is unique in the sense that there is only one way of pairing the vertices of the first with the vertices of the second so as to achieve a perfect overlap of the two triangles.12 In the second, isosceles, case, by contrast, there are at least two distinct congruences.

To rephrase our example a bit more generally and succinctly: It can be important to distinguish between knowing that two objects are merely equivalent, and knowing more firmly that they are equivalent via a unique equivalence.
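The distinction can be checked by brute force. Viewed as a pairing of vertices, a congruence between two triangles is one of the six permutations of the vertex labels that carries each side onto a side of the same length (by the side-side-side criterion, such a pairing is realized by a rigid motion of the plane). The sketch below, with illustrative coordinates and an illustrative function name, counts these pairings for a scalene pair and for an isosceles, non-equilateral pair.

```python
from itertools import permutations
from math import dist, isclose


def congruences(t1, t2):
    """All vertex pairings (as permutations of 0, 1, 2) that match t1's sides with t2's."""
    pairings = []
    for perm in permutations(range(3)):
        if all(isclose(dist(t1[i], t1[k]), dist(t2[perm[i]], t2[perm[k]]))
               for i in range(3) for k in range(i + 1, 3)):
            pairings.append(perm)
    return pairings


# Scalene: sides 3, 4, 5.  DEF is ABC translated by (1, 1).
ABC = [(0, 0), (4, 0), (0, 3)]
DEF = [(1, 1), (5, 1), (1, 4)]
# Isosceles: two sides of length sqrt(10).  JKL is GHI translated by (5, 0).
GHI = [(0, 0), (2, 0), (1, 3)]
JKL = [(5, 0), (7, 0), (6, 3)]

print(len(congruences(ABC, DEF)))  # 1: only A->D, B->E, C->F
print(len(congruences(GHI, JKL)))  # 2: G->J, H->K, I->L and G->K, H->J, I->L
```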

Plausible Inference

I imagine that the legal profession has lots to teach scientists and mathematicians about the subtly different levels of plausibility in inferential arguments.

Mathematicians may be known for the proofs they end up discovering, but they spend much of their time living with mistakes, misconceptions, analogies, inferences, partial patterns that hint at more substantial ones, rules of thumb, and somewhat systematic heuristics that allow them to do their work. How can one assess the value of any of this mid-process? Here are three important modes of plausible reasoning.

- reasoning from consequence

- reasoning from randomness

- reasoning from analogy

This taxonomy is taken from an article I’m about to publish, entitled “Is It Plausible?” The first of these modes is largely non-heuristic, while the other two are heuristic, my distinction being:

- A heuristic method is one that helps us to actually come up with (possibly true, and interesting) statements and gives us reasons to think that they are plausible.

- A non-heuristic method is one that may be of great use in shoring up our sense that a statement is plausible once we have the statement in mind, but is not particularly good at discovering such statements for us.

I recommend that (for fun) you take a look at a few pages of this classical expository treatise on plausible inference in mathematics:

G. Pólya, Mathematics and Plausible Reasoning, Vol. I: Induction and Analogy in Mathematics; Vol. II: Patterns of Plausible Inference (Princeton, N.J.: Princeton University Press, 1956–1968).

Two Mathematical Issues

The problems involved in the so-called “crisis in foundations” are related to the use of the two words “all” and “exist.”

All: Now, it is perfectly OK to make statements about “all people” since, although “corporations” may or may not be included, the universe (of people) referred to is fairly well defined. If, however, you begin a thought with the phrase “All thoughts,” you are quantifying over a moving terrain, since your very new thought is, after all, a “thought,” which therefore changes the range over which you are making your assertion, resulting in a sort of Winnie-the-Pooh conundrum. This may have seemed harmless until Bertrand Russell showed that such insouciant universal quantification led to contradictions.

Exist: When you use indirect argument to show that some “thing,” call it X, exists, you have in your hands a powerful tool. Its subtlety is that you aren’t required to put your finger on the X you are trying to show exists; all you need do is discredit the assertion “X does not exist.” Whether discrediting such an assertion is enough to confer ontological status on X is what is hotly disputed.

If you questioned Hilbert, Kronecker, Brouwer, Frege, Russell and Gödel regarding their stance on the issues related to the two terms above, you’d get significantly different answers.

For some fundamental background here, you need only read the first four pages of David Hilbert’s classic essay “On the Infinite” and the related essays of L. E. J. Brouwer, Frege, Russell and Gödel, all reprinted in Jean van Heijenoort’s From Frege to Gödel: A Source Book in Mathematical Logic, 1879–1931 (Cambridge: Harvard University Press, 1967).

For a very different (and interesting!) take on the same subject matter, see Apostolos Doxiadis, Christos H. Papadimitriou, Alecos Papadatos, and Annie Di Donna, Logicomix: An Epic Search for Truth (New York: Bloomsbury, 2009).

Euclid’s Parallel Postulate and Its “Evolution”

If a line segment intersects two straight lines forming two interior angles on the same side that sum to less than two right angles, then the two lines, if extended indefinitely, meet on that side on which the angles sum to less than two right angles.
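In the Cartesian model of the plane, the postulate’s conclusion can at least be computed. A minimal sketch under illustrative assumptions: the transversal runs from A = (0, 0) to B = (1, 0), one line leaves A at interior angle α and the other leaves B at interior angle β, both measured above the transversal, and the law of sines gives the meeting point. When α + β is less than two right angles the lines meet above (on the side of the small angles); when it is greater they meet below; when it equals two right angles they are parallel. A computation inside one model says nothing about whether the postulate follows from Euclid’s other axioms; that question is what the history below is about.

```python
import math


def meeting_point(alpha, beta):
    """Intersection of the two lines in the Cartesian model, or None if they are parallel.

    The transversal runs from A = (0, 0) to B = (1, 0).  One line leaves A at
    interior angle alpha, the other leaves B at interior angle beta, both
    measured in radians on the upper side of the transversal (0 < angle < pi).
    """
    s = math.sin(alpha + beta)
    if math.isclose(s, 0.0, abs_tol=1e-12):
        return None  # the angles sum to two right angles: parallel lines
    # Law of sines: the signed distance from A to the meeting point is sin(beta) / sin(alpha + beta).
    r = math.sin(beta) / s
    return (r * math.cos(alpha), r * math.sin(alpha))


for a, b in [(60, 60), (80, 70), (90, 90), (100, 100)]:
    print(a, b, meeting_point(math.radians(a), math.radians(b)))
```

A positive y-coordinate means the lines meet on the side where the angles sum to less than two right angles, as the postulate asserts.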

For a very readable introduction to the history of “reactions” to this postulate, including attempts to actually prove it, using only the other Euclidean axioms, I recommend visiting the website Cut the Knot, run by Alexander Bogomolny. Specifically, see:

The change of status of this postulate is related to the evolution of the concept of “models” in pure mathematics.

Regarding models and the strain on models posed by experimental mathematics and massive computation, Peter Norvig, Google’s research director, pronounced the following dictum:

“All models are wrong, and increasingly you can succeed without them.”

This sentiment was taken up by Chris Anderson in a recent issue of “Wired” magazine and extensively commented on by the mathematician George Andrews.13 Anderson noted that traditional science depended on model formation. He went on to say:

The models are then tested, and experiments confirm or falsify theoretical models of how the world works. This is the way science has worked for hundreds of years. Scientists are trained to recognize that correlation is not causation, that no conclusions should be drawn simply on the basis of correlation between X and Y (it could just be a coincidence). Instead, you must understand the underlying mechanisms that connect the two. Once you have a model, you can connect the data sets with confidence. Data without a model is just noise. But faced with massive data, this approach to science (hypothesize, then model, then test) is becoming obsolete. Consider physics: Newtonian models were crude approximations of the truth (wrong at the atomic level, but still useful). A hundred years ago, statistically based quantum mechanics offered a better picture but quantum mechanics is yet another model, and as such it, too, is flawed, no doubt a caricature of a more complex underlying reality. The reason physics has drifted into theoretical speculation about n-dimensional grand unified models over the past few decades (the “beautiful story” phase of a discipline starved of data) is that we don’t know how to run the experiments that would falsify the hypotheses: the energies are too high, the accelerators too expensive, and so on. Now biology is heading in the same direction. … In short, the more we learn about biology, the further we find ourselves from a model that can explain it. There is now a better way. Petabytes allow us to say: “Correlation is enough.” We can stop looking for models. We can analyze the data without hypotheses about what it might show. We can throw the numbers into the biggest computing clusters the world has ever seen and let statistical algorithms find patterns where science cannot.14

But correlation alone will never replace the explanatory power of mathematics. Mathematics, the word, comes from the Greek μάθημα, which means nothing less than “that which is learned,” a phrase that has an all-encompassing grandeur. No wonder, then, that to teach mathematics is so formidable a task; you are grappling with the pure essence of learning. To teach effectively, you had better use every species of true persuasion, of genuine evidence, that you can bring to the picture, with the rigor of proof as its frame. Providentially, there is a rich palette of different types of evidence aiding us when we learn “that which is learned,” and I hope I’ve given a hint of it.

Barry Mazur is a mathematician at Harvard University currently working in number theory. He is the author of “Imagining Numbers (Particularly the Square Root of Minus Fifteen),” published by Farrar, Straus and Giroux (2003), and the recipient of the 2011 National Medal of Science.

•••

13 G. Andrews, “Drowning in the Data Deluge,” Notices of the American Mathematical Society 59 (2012): 933–41.

See also:

- G. E. Andrews, “The Death of Proof? Semi-Rigorous Mathematics? You’ve Got to Be Kidding!” Mathematical Intelligencer 16 (1994): 16–18.

- J. M. Borwein, P. B. Borwein, R. Girgensohn, and S. Parnes, “Making Sense of Experimental Mathematics,” Mathematical Intelligencer 18 (1996): 12–18.

and two books:

- J. Borwein and D. Bailey, Mathematics by Experiment: Plausible Reasoning in the 21st Century (Natick, MA: AK Peters, 2003).

- J. Borwein, D. Bailey, and R. Girgensohn, Experimentation in Mathematics: Computational Paths to Discovery (Natick, MA: AK Peters, 2004).

14 I want to thank Stephanie Dick, who provided me with the following three references regarding a related topic, namely, automatized proofs in mathematics.

- Donald MacKenzie, “Computing and the Culture of Proving,” Philosophical Transactions of the Royal Society 363 (2005): 2335–50.

- Donald MacKenzie, “Slaying the Kraken: The Sociohistory of Mathematical Proof,” Social Studies of Science 29 (1999): 7–60.

- Hao Wang, “Toward Mechanical Mathematics,” IBM Journal of Research and Development 4, no. 1 (1960): 2–22.

For discussions of similar issues, but with attention paid to changes in attitudes towards foundations, see:

- Leo Corry, “The Origins of Eternal Truth in Modern Mathematics: Hilbert to Bourbaki and Beyond,” Science in Context 12 (1998): 137–83.

- D. A. Edwards and S. Wilcox, “Unity, Disunity and Pluralism in Science” (1980), http://www.arxiv.org/pdf/1110.6545

- Arthur Jaffe, “Proof and the Evolution of Mathematics,” Synthese 111, no. 2 (1997): 133–46.