Symposium: Evidence in the Natural Sciences

New technical approaches in science and mathematics are enabling researchers to explore more complex issues than ever before. However, the new techniques are also forcing scientists to challenge basic assumptions — including the traditional notions of evidence and proof.

For example, the use of large-scale computer calculations in the traditionally paper-and-pencil discipline of mathematics has prompted researchers to reevaluate the whole idea of what makes a proof. In the life sciences, meta-analyses enabled by new statistical tools have raised doubts about the reliability of published literature. And while spectacular progress in observations and theories has provided us with a remarkably clear picture of events that occurred 13.8 billion years ago, quantum mechanics, a fundamental ingredient in this understanding, struggles to reconcile evidence with a plausible model of reality.

Nine speakers discussed these and related issues at a symposium held on May 30, 2014, in the Gerald D. Fischbach Auditorium at the Simons Foundation in New York City. Titled “Evidence in the Natural Sciences,” the all-day conference was jointly sponsored by the Simons Foundation and the John Templeton Foundation with the goal of eliciting an exchange of ideas about the changing nature of evidence in the modern scientific endeavor.

How do you fill a space with as many spheres as possible? This question has long preoccupied Thomas Hales, a professor of mathematics at the University of Pittsburgh and the first speaker at the symposium. “Every grocer knows the answer to this problem,” he said. “It’s just the ordinary cannonball arrangement,” in which each sphere is cradled below by three spheres abutting in a triangle. Johannes Kepler first proposed this conjecture in 1611, but rigorously proving it hasn’t been easy. Over the centuries, many mathematicians have tried and failed.

In 1998, Hales and his graduate student Samuel Ferguson announced a proof consisting of nearly 300 pages — plus at least 40,000 lines of custom computer code. Hales recalled in his talk that the journal Annals of Mathematics assigned a dozen referees “to check that there was not a single error anywhere in this proof.” But when the unabridged version was finally published in 2005, the editor conceded that referees could not certify its correctness “because they have run out of energy to devote to the problem.”

The use of computers in pure mathematics predates Hales and Ferguson’s work, but since their paper’s publication it has become much more common. The difficulty, according to Hales, is that “computers have bugs, and mathematicians don’t like bugs.” He acknowledged that his 1998 proof contained bugs.

Undaunted, Hales tackled the problem using an approach known as “formal proof,” in which the fundamental rules of logic and axioms of mathematics are programmed into a computer as the basis for a proof. Now almost finished, Hales’ proof contains a “trusted kernel” of computer code filling only about 400 lines, though it still requires 20,000 hours of computer time. The payoff is that human referees won’t be needed to check it; a computer will do the job.

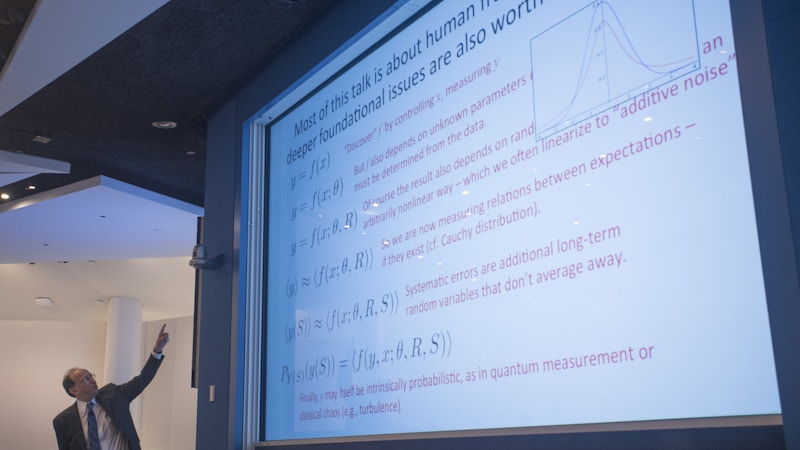

The next speaker, David Donoho, a professor of statistics and of humanities and sciences at Stanford University, discussed recently generated evidence in biomedical research that strongly indicates “systematic failure at the scale of millions of papers being wrong.” That conclusion is based on meta-analyses, in which computers aggregate the results of many small clinical studies, thereby allowing researchers to estimate a treatment effect based on large numbers of patients and with a small margin of error. “It’s very much the case that the effects are weaker as trial sizes increase,” Donoho said. In fact, the effect often shrinks to zero, he added, “but not always — there are effective treatments out there.”
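To illustrate how pooling many small studies narrows the margin of error, here is a minimal sketch of fixed-effect inverse-variance pooling, one common meta-analysis technique. The talk as reported here did not name a specific method, so the choice of technique is an assumption. The function name `pool_fixed_effect` and the four study values are hypothetical and chosen only for illustration.

```python
import math


def pool_fixed_effect(estimates, std_errors):
    """Combine per-study effect estimates by inverse-variance weighting.

    Each study is weighted by 1 / SE^2, so more precise (usually larger)
    studies count for more. Returns (pooled_estimate, pooled_std_error).
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical data: (effect estimate, standard error) for four small studies.
studies = [(0.40, 0.30), (0.10, 0.25), (0.25, 0.35), (-0.05, 0.20)]
effects = [effect for effect, _ in studies]
errors = [se for _, se in studies]

pooled, pooled_se = pool_fixed_effect(effects, errors)
print(f"pooled effect = {pooled:.3f}, 95% half-width = {1.96 * pooled_se:.3f}")
```

The pooled standard error is always smaller than that of any single study in the input, which is why an effect that looks sizable in one small trial can shrink toward zero once the trials are combined.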

According to Donoho, data collected by his fellow Stanford professor John Ioannidis and others surveying the corpus of published literature show that many papers cannot be replicated and that incorrect findings tend to have more impact than correct findings. This undesirable situation, he said, contributes to the high failure rates of clinical trials. One of his slides showed that from 2007 to 2011, 78 percent of Phase II trials failed, as did 35 percent of Phase III trials.

Donoho attributed the problem to three factors: a plethora of small, “underpowered” studies; a bias toward publishing only positive results even though negative results can be more informative; and a tendency among researchers to view themselves as artists inspired by ideas about how to analyze data. As a scientist-artist, “you try lots of ideas, and you see what sticks,” he said. “And what sticks is what gives you a positive result.”

Donoho’s recommendation — similar, he noted, to that of Hales — was “to get the human out of the equation.” To remove the subjective element in biomedical science, he sketched out a system that would computerize the process of proposing, simulating and refereeing studies. Both positive and negative results would be plugged into this system.

The third speaker, William Press, a professor of computer science and integrative biology at the University of Texas, Austin, disagreed with Donoho on one point. For many types of scientific research, he said, “I think that exploratory statistics — that’s what David [Donoho] called art — is very important.”

Press asserted that studies are plagued not only by random measurement “noise” (which can often be statistically averaged away) but also by systematic errors that can remain undetectable for years until someone discovers an alternative way of doing an experiment. Efforts to address the inability to reproduce a study have focused on better communication of scientific findings.

In May 2013, the Nature group of journals established a checklist of items that the authors of papers must report. The journals also signaled their intent to begin consulting statisticians about certain papers. The editor of Science, meanwhile, wants to ask reviewers to flag particularly good papers so that future symposia can determine best practices in a field.

Press expressed skepticism about such proposals. “Slavish statistical rituals are part of the problem,” one of his slides asserted. Instead of focusing on the best papers in a field, he suggested that perhaps scientists should “flog the bad papers in public.” (Press was not referring to papers resulting from intentional scientific misconduct, which he characterized as “a very small part of the problem.”)

Press had several other recommendations for the biomedical sciences: First, promote self-critical thinking by requiring authors to submit an essay to journals, along with their paper, on the three likeliest ways the paper could be wrong. (The essay wouldn’t be published.) Second, require higher levels of statistical significance. Third, do not publish papers with small but significant results, unless the findings suggest an interesting hypothesis. Finally, publish and publicize more studies with negative findings. “Luckily the Web has come along, and they don’t have to kill trees to do this,” Press pointed out.

Amber Miller, a professor of physics at Columbia University, discussed the different types of direct and indirect evidence that support the Big Bang theory. The hot, dense plasma present at the beginning of the universe, for instance, should have emitted a particular type of blackbody radiation, and the Cosmic Background Explorer satellite detected such radiation. Miller described a graph of that radiation published in 1990 as “one of the most spectacular results I’ve ever seen in physics.” She continued: “We don’t expect ever to be convinced that the universe did not start out hot and dense. That’s on very firm footing.”

Miller went on to discuss the difficulties scientists often have in communicating with the public. They create “the impression that things that are known are overturned all the time,” she explained, and the public consequently does not believe anything they say. That disbelief, she added, proves “very harmful” when policymakers want to turn to science to address problems such as global warming. Scientists should instead stress that new evidence refines, rather than destroys, theories. “A paradigm shift in modern cosmology doesn’t mean that everything that came before it was wrong,” Miller said. “It means we have a better, more complete way of thinking about something.”

Another mistake scientists make, Miller said, is to overstate what their evidence proves. As an example, she cited a recent announcement about cosmological inflation, the super-rapid expansion that the universe is thought to have undergone in its first 10⁻³⁵ second. At a press conference in March, a group claimed that unpublished observations from the BICEP2 telescope at the South Pole supported the inflation theory to such an extent that, according to the group’s abstract, “a new era of … cosmology has begun.” For Miller, that sentence was a clear case of sensationalism. Indeed, after the BICEP2 announcement, a number of papers appeared, arguing that currently available evidence is not sufficient to support the group’s claim.

One reason scientists may misjudge the strength of their hypotheses is that the types of evidence recognized as proof are constantly evolving. Later in the symposium, Peter Galison, a professor of the history of science and physics at Harvard University, discussed two evidentiary approaches in particle physics. Starting in the Victorian age, the so-called “image tradition” visualized subatomic particles in bubble chambers. The pictures “became one of the most striking triumphs of the atomic theory,” Galison said, adding, “Making the atomic world visible was a shattering, explosive development for physics.”

The second evidentiary approach was the “logic tradition,” which began decades later. In its simplest form, particles were introduced into a cathode/anode tube with a big voltage difference, thereby creating sparks that could be counted. Instead of generating pictures, this approach created vast arrays of numbers that could be statistically analyzed.

Galison observed that each of the two traditions had its strengths and weaknesses: The image tradition, while it graphically captured what was happening in an experiment, did not always focus selectively enough and could overwhelm researchers with complex data that were impossible to analyze. The logic tradition allowed for more data selectivity but could inadvertently exclude something interesting by focusing on the wrong thing. Eventually, Galison noted, projects began to incorporate both approaches, but that process was not without some friction as scientists grounded in one tradition were forced to accept the other.

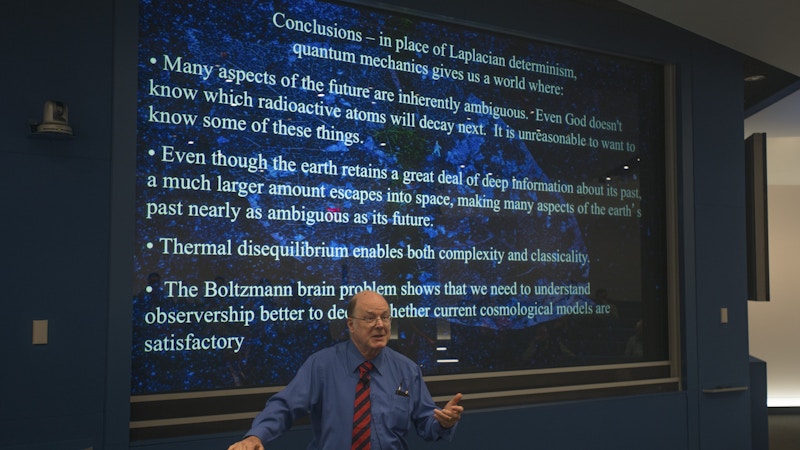

Both traditions have provided evidence to support quantum mechanics, one of the reigning theories of physics. Nevertheless, the symposium’s final speaker, Tim Maudlin, a professor of philosophy at New York University, contended that physics is “a complete and total disaster, and has more or less been so since the invention of quantum mechanics.”

Quantum mechanics deals with physical phenomena at the atomic and subatomic levels and views light as both a wave and a particle. Over the past century, quantum mechanics has amassed an impressive record of successful predictions that culminated two years ago in the discovery of the Higgs boson, the last missing piece in the standard model of particle physics. The probability of getting this result just by chance was one in 3.5 million.
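The one-in-3.5-million figure matches the one-sided tail probability of a five-standard-deviation fluctuation under a normal distribution, the conventional discovery threshold in particle physics. Assuming that is where the number comes from (the text above does not say so explicitly), the arithmetic can be checked with a short sketch; the function name `one_sided_tail` is hypothetical.

```python
import math


def one_sided_tail(n_sigma):
    """Probability that a standard normal variable exceeds n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


# Assumption: the quoted odds correspond to a 5-sigma one-sided threshold.
p = one_sided_tail(5.0)
print(f"p = {p:.3e}, roughly 1 in {1.0 / p / 1e6:.1f} million")
```

Under that assumption, the chance of a background fluctuation at least this large comes out near 3 in 10 million, consistent with the figure quoted above.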

But quantum mechanics fails to satisfy Maudlin’s ambition to know what the world is like. “You want to ask yourself, what does this theory claim about the physical world?” he said. “And the problem is, at present, there is zero agreement at a fundamental level among physicists about how to understand the theory.” He contrasted quantum mechanics with another pillar of modern physics, the general theory of relativity, which deals with gravity. “If you study the general theory, you come to understand it pellucidly from beginning to end. There really aren’t conceptual problems.” At the end of his talk, Maudlin noted that evidence generally exists on a macroscopic scale, and therefore it is difficult to determine, through experiments, which of the microscopic scenarios proposed by quantum mechanics best describes reality.

In a different take on the subject, Charles Bennett of IBM Research discussed the durability of evidence. He observed that most macroscopic information in the world is eventually lost “unless it’s lucky enough to get fossilized or photographed.” Bennett then posed a question: As new scientific techniques generate more and more evidence, what should become of this evidence? Which information is worth preserving, and how should such preservation be carried out? Bennett’s own opinion was that information with complexity and “logical depth” (concepts that he said could be defined mathematically or computationally) should be preserved. A living organism or a nonliving object can display complexity, he said, if it “would be hard to replace” after it was destroyed. One example of the latter would be the last copy of a good book — a compilation of plays by Shakespeare, say, but not a blank volume.

During his presentation, Hales asked: “So what happens to mathematics when we move away from paper proofs to computer proofs, as we move away from simple arguments to complex arguments that take hundreds or even thousands of pages to write down?” Thorny questions like this, even if not so starkly expressed, permeated the symposium. Complexity is an attribute of modern science and mathematics that is bound to increase; as a result, the way we think about evidence and proof will have to evolve. The talks and discussions helped to characterize the issues involved and point to possible ways forward.
