How the Cerebellum Learns to Learn

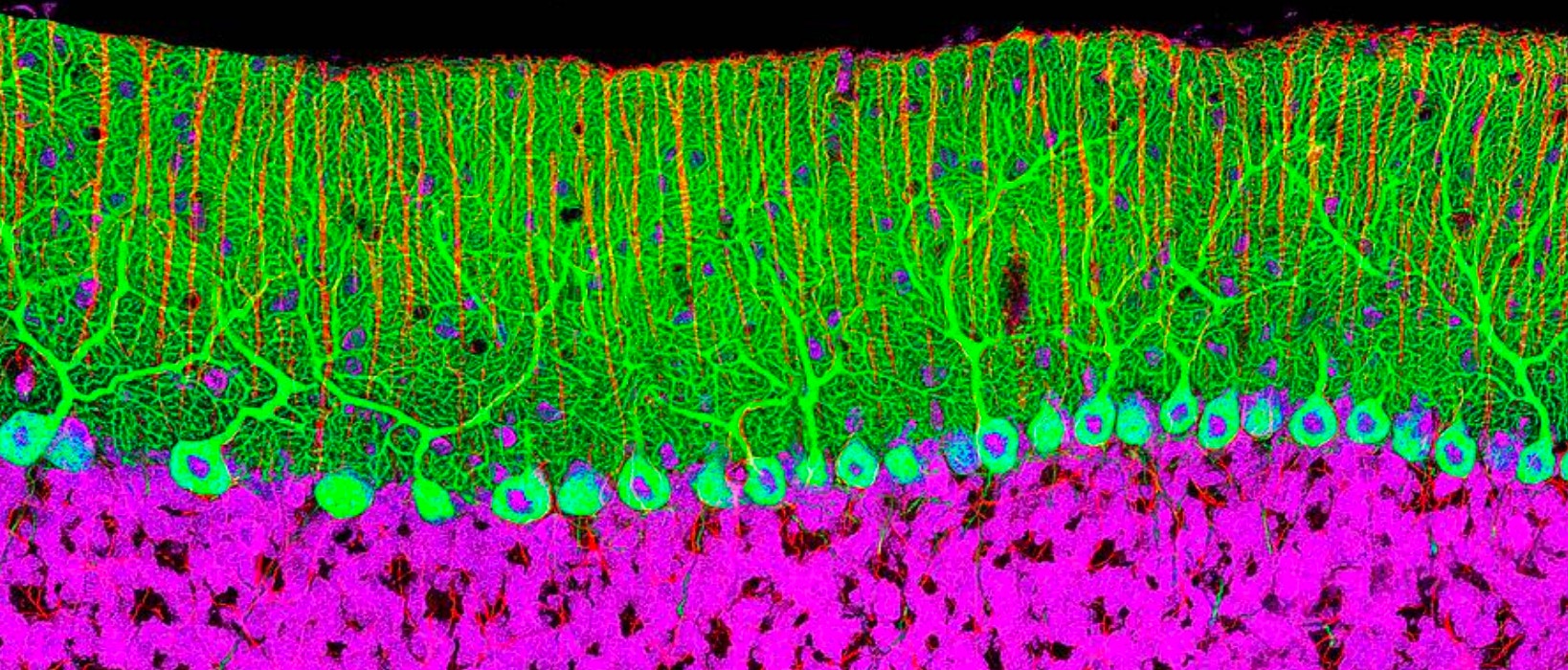

The cerebellum, classically known for its role in motor learning, possesses an almost crystalline structure. Mossy fibers and climbing fibers carry information into the cerebellum, eventually passing it along to exquisitely branched Purkinje cells, the structure’s output cells. These circuits use incoming sensory information to regulate motor commands, helping to make movements more accurate. They maintain balance, for example, by modifying motor signals in response to changes in body position.

Researchers now know that the cerebellum is involved in a wide range of motor and cognitive functions, including locomotion, decision-making and reward processing. Because these diverse functions are supported by a common circuit architecture, deciphering how they work could allow researchers to uncover shared computational principles.

The regular structure and well-defined anatomy make the cerebellum particularly fertile ground for the exploration of neural plasticity and the ways neural circuits change in response to experience. Jennifer Raymond, a neuroscientist at Stanford University, has spent the last 20 years investigating the algorithms that neural circuits use to tune their performance.

A decades-old theory proposes that climbing fiber input to the cerebellum functions as an error detector, weakening synapses that carry inaccurate information. But a debate has raged in the field over whether this is truly the mechanism by which the cerebellum learns.

Raymond, an investigator with the Simons Collaboration on the Global Brain, has found that the reality is much more nuanced. Despite its stereotyped structure, the cerebellum is more flexible than previously thought and can employ different mechanisms to implement learning. Raymond’s team has also found that cerebellar circuits can modify the properties of their plasticity, a potential mechanism for meta-learning, or learning to learn.

Raymond described her work at the Albert and Ellen Grass Lecture at the Society for Neuroscience meeting in Chicago in October 2019 and later spoke with the SCGB about her discoveries. An edited version of the conversation follows.

What first drew you to the cerebellum? Why is it such a good system for studying learning?

I think the cerebellum’s relatively simple circuit architecture gives us a decent chance of being able to understand how it implements learning. There are five main types of neurons in the cerebellar cortex, compared with dozens in the cerebral cortex. This makes it feasible for scientists like me to integrate across different levels of organization, from the molecular level up to behavior. Also, Purkinje cells are the sole output neurons, so all computations performed in the cerebellar cortex can only get out through these cells. That provides a nice bottleneck where we can look at the output of its computations.

You mentioned that you are particularly intrigued by plasticity, the ability to tune performance via experience. Why is that such an important phenomenon to study?

All the computations our circuits perform are shaped by plasticity and learning. There is evidence that even things we don’t think of as learned, such as how we process sensory information, are shaped by experience. This is a fundamental feature of how the brain computes: it monitors the success of its computations and updates them so that it performs them more efficiently the next time.

Cerebellar granule cells make up half of all the neurons in the mammalian brain. Why are they so numerous?

It’s a long-standing question that has been almost impossible to address because the cells are so small and densely packed, making them inaccessible to traditional electrophysiology methods. There are just a handful of recordings of these cells in awake behaving animals. We are working with engineers at Stanford to develop new electrodes to record from granule cells during motor skill-learning tasks to gain insights about how these cells represent and transform the relevant information.

How is plasticity important in the cerebellum?

My lab works on the part of the cerebellum where the outputs are movements. The commands the brain generates for controlling movements are modified by experience. This is true for even very basic movements that we take for granted, like reaching for a glass without spilling it. We learn how to do that through trial and error. If you’ve watched a toddler, you’ve seen this trial and error in action. Then, as the body changes (if you get stronger by going to the gym, say, or get weaker), the motor system needs to adjust the commands it sends to muscles to keep performing that movement accurately. The cerebellum contributes to those commands by monitoring performance and implementing changes to motor circuitry if movements aren’t accurate.

What is the traditional theory of how the cerebellum learns?

According to the textbook model, the cerebellum implements an error-correcting kind of learning. If certain parallel fiber synapses are too strong and cause an error, the error is reported to the Purkinje cell by its climbing fiber input. This weakens the parallel fiber synapses that led to the error, reducing that error in the future.
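To make the textbook picture concrete, here is a minimal sketch, assuming a linear Purkinje cell whose output is a weighted sum of parallel fiber inputs and a climbing fiber signal that reports a signed error (output minus a hypothetical target). The function name `train_purkinje`, the learning rate and the activity patterns are illustrative assumptions, not taken from Raymond’s work, and the update is a simple delta-rule caricature of error-correcting learning rather than a model of real cerebellar physiology.

```python
import numpy as np


def train_purkinje(pf_patterns, targets, lr=0.05, epochs=200, seed=0):
    """Toy error-correcting learning at parallel fiber -> Purkinje cell synapses.

    pf_patterns: (n_trials, n_fibers) hypothetical parallel fiber activity
    targets: (n_trials,) hypothetical desired Purkinje cell output per pattern
    Returns the learned weights and the mean squared error for each epoch.
    """
    rng = np.random.default_rng(seed)
    # Random initial weights; with the example targets below they start out too strong.
    w = rng.uniform(0.5, 1.5, size=pf_patterns.shape[1])
    history = []
    for _ in range(epochs):
        sq_err = 0.0
        for pf, target in zip(pf_patterns, targets):
            output = pf @ w        # linear Purkinje cell: weighted sum of parallel fiber input
            cf = output - target   # assumed signed climbing fiber error (positive = overshoot)
            # When cf > 0, synapses that were active are weakened; a negative cf
            # strengthens them (a simplification of the textbook picture).
            w -= lr * cf * pf
            sq_err += cf ** 2
        history.append(sq_err / len(targets))
    return w, history


if __name__ == "__main__":
    # Three hypothetical parallel fiber activity patterns across five fibers.
    pf = np.array([[1, 0, 1, 0, 0],
                   [0, 1, 0, 1, 0],
                   [0, 0, 1, 1, 1]], dtype=float)
    targets = np.array([0.5, 0.2, 0.8])
    w, history = train_purkinje(pf, targets)
    print(f"mean squared error: first epoch {history[0]:.3f}, last epoch {history[-1]:.2e}")
```

In this toy, weakening exactly the synapses that were active when the error was reported is enough to drive the error toward zero; the interview’s point is that the real cerebellum does not rely on this single mechanism.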

A key question is the extent to which the error signals carried by climbing fibers control cerebellar learning. There is considerable evidence that climbing fibers carry error signals. But are those errors — and the resulting weakening of parallel fiber synapses — truly the mechanism the cerebellum uses to learn?

In brain slices, activating both parallel fibers and climbing fibers weakens the parallel fiber synapses onto Purkinje cells, a phenomenon called synaptic depression. But actually demonstrating that specific synapses have been modified by learning is still quite challenging. Recording, stimulation and perturbation experiments in our lab provide convergent evidence that depression of these synapses contributes to learning, but the same experimental approaches also indicate that this is not the whole story — that depression of those synapses cannot account for all of the learning that’s observed.

What’s actually happening in the cerebellum during learning is more nuanced. Sometimes the cerebellum implements learning using this mechanism, and sometimes it uses other mechanisms we don’t fully know about yet. There is not one way the cerebellum implements learning; there are multiple ways. If that’s true for the cerebellum, with its stereotyped circuit architecture, it’s almost certainly true in other brain areas.

How are these findings changing our view of how the cerebellum works?

There is a decades-old view that the cerebellum’s simple architecture would do computations and implement learning in a stereotyped way, like a machine. The same process would be used across the cerebellum in all kinds of learning. In contrast, we find that subtle changes in how you do the training can cause different mechanisms to be recruited within the cerebellum to support learning. For example, if you need to learn to make bigger eye movements, the cerebellum seems to use different mechanisms to accomplish that, depending on whether the stimuli used for training are delivered once every two seconds versus once per second. A small change causes different neural mechanisms to get recruited to support the learned increase in eye movement amplitude.

This is important scientifically because it means that tuning the way a circuit computes can occur through a richer set of mechanisms than we thought. It’s important clinically because if one mechanism is compromised, there may be other mechanisms that can still be recruited to accomplish a similar end result, if you can figure out how to tap into those alternative mechanisms.

You have also found a potential solution to an issue with feedback learning — how to match error feedback to the inputs that caused the error.

This is a problem that arises for any kind of learning that uses feedback. The nervous system produces an output, then gets feedback that determines if the output was good or bad. But it’s challenging to implement learning using feedback if there is a delay between neural activity and feedback. How does that feedback selectively modify the synapses that were responsible for the outcome? Theorists call this the temporal credit assignment problem.
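The difficulty can be illustrated with a small sketch, assuming a generic eligibility trace that decays exponentially after each presynaptic spike (a common textbook device, not something the interview attributes to the cerebellum). The synapse names, spike times and time constants below are hypothetical. Because such a trace decays monotonically, whichever synapse fired most recently before the feedback receives the most credit, even if an earlier synapse actually caused the error.

```python
import math


def exp_trace_credit(spike_times_ms, feedback_time_ms, tau_ms=20.0):
    """Credit each synapse receives when a delayed error signal arrives,
    assuming a hypothetical exponentially decaying eligibility trace.

    spike_times_ms: dict mapping synapse name -> time of its presynaptic spike (ms)
    feedback_time_ms: time at which the error signal arrives (ms)
    """
    credit = {}
    for name, t_spike in spike_times_ms.items():
        lag = feedback_time_ms - t_spike
        # Only spikes that precede the feedback leave a trace; it decays with tau_ms.
        credit[name] = math.exp(-lag / tau_ms) if lag >= 0 else 0.0
    return credit


if __name__ == "__main__":
    # Hypothetical scenario: the synapse active at 0 ms caused the error,
    # a bystander synapse fired at 100 ms, and the error arrives at 120 ms.
    spikes = {"caused_error": 0.0, "bystander": 100.0}
    for tau in (20.0, 200.0):
        credit = exp_trace_credit(spikes, feedback_time_ms=120.0, tau_ms=tau)
        print(tau, {k: round(v, 3) for k, v in credit.items()})
```

With either time constant the bystander gets more credit than the synapse responsible for the error, which is the mismatch the temporal credit assignment problem describes.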

We found that, in contrast to much of the previous research, some synapses in the cerebellum can selectively undergo plasticity at a fairly long delay. With classic spike-timing-dependent plasticity, neurons need to be active within 10 to 20 milliseconds of each other. However, in the eye movement part of the cerebellum, plasticity is induced when climbing fibers are active 120 milliseconds after parallel fibers, and not at longer or shorter intervals. The 120-millisecond delay corresponds exactly to the time it takes for parallel fiber activity to produce an oculomotor error and for that error to be reported back to the cerebellum. In other words, the timing requirements for synaptic plasticity are precisely tuned to deal with the feedback delay in the circuit.
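The contrast between the two timing rules can be sketched as plasticity windows over the lag between parallel fiber and climbing fiber activity. This is a minimal illustration under assumed shapes: an exponential window with a 15 ms time constant stands in for a generic short-latency rule, and a Gaussian window peaked at 120 ms with a hypothetical 15 ms width stands in for the delay-tuned rule. Only the 120 ms peak comes from the text; the functional forms and widths are assumptions.

```python
import math


def short_window(lag_ms, tau_ms=15.0):
    """Illustrative short-latency rule: strongest when the climbing fiber fires
    right after parallel fiber activity, decaying over roughly 10 to 20 ms."""
    return math.exp(-lag_ms / tau_ms) if lag_ms >= 0 else 0.0


def delay_tuned_window(lag_ms, peak_ms=120.0, width_ms=15.0):
    """Hypothetical Gaussian window peaked at the eye movement circuit's 120 ms feedback delay."""
    return math.exp(-((lag_ms - peak_ms) ** 2) / (2.0 * width_ms ** 2))


def preferred_lag(window, lags_ms):
    """Parallel fiber -> climbing fiber lag that produces the strongest plasticity."""
    return max(lags_ms, key=window)


if __name__ == "__main__":
    lags = range(0, 301, 10)  # candidate lags in ms
    print("short window prefers", preferred_lag(short_window, lags), "ms")
    print("delay-tuned window prefers", preferred_lag(delay_tuned_window, lags), "ms")
    # Under the delay-tuned window, lags of 60 and 240 ms produce negligible plasticity.
    print([round(delay_tuned_window(lag), 4) for lag in (60, 120, 240)])
```

Unlike a decaying trace, a window peaked at the loop delay favors the synapse whose activity actually preceded the error by 120 ms, rather than the most recently active one.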

If you go to other parts of the cerebellum, plasticity at the same anatomical type of synapse is tuned to different delays. This tuning of plasticity occurs through experience. When we eliminate visual feedback by raising animals in darkness, thereby eliminating the error signals that are used for calibrating eye movements, the rules for synaptic plasticity are totally altered. It is only through experience that the timing rules for synaptic plasticity become matched to the feedback delay in the circuit.
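How experience might set the window’s timing is still an open question; the molecular machinery is under investigation. Purely as a toy illustration, assume a hypothetical rule in which the window’s peak moves toward each experienced delay between parallel fiber activity and the climbing fiber error it evokes (an exponential moving average). The function name, rate and delays below are invented for the sketch. In this toy, a region with no error signals simply keeps its initial window; the interview reports only that dark rearing alters the rules, not what they become.

```python
def tune_window_peak(initial_peak_ms, experienced_lags_ms, rate=0.1):
    """Hypothetical rule: nudge the plasticity window's peak toward each experienced
    delay between parallel fiber activity and the resulting climbing fiber error.
    This is an exponential moving average; with no error events the peak never moves."""
    peak = initial_peak_ms
    for lag in experienced_lags_ms:
        peak += rate * (lag - peak)
    return peak


if __name__ == "__main__":
    # Two hypothetical regions with different feedback loops, both starting from a short window.
    print(round(tune_window_peak(20.0, [120.0] * 100), 2))  # eye movement region, 120 ms loop
    print(round(tune_window_peak(20.0, [60.0] * 100), 2))   # hypothetical region with a shorter loop
    print(tune_window_peak(20.0, []))  # no error signals (e.g., no visual feedback): no tuning
```

The same anatomical synapse type ends up with different timing rules in different regions, set only by the delays each region experiences, which mirrors the qualitative claim in the interview without implying anything about the real mechanism.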

Do you have hypotheses for the mechanism underlying these very different tunable molecular clocks?

Many people are interested in this! We are currently doing experiments to analyze the molecular machinery implementing the tunable synaptic timing. We are also working with SCGB collaborator Mark Goldman to evaluate whether the models he and others have used to describe neural integration and adaptive timing at the circuit level might also describe what is happening at the molecular level, within the Purkinje cell, to build a tunable molecular timer.

Do you think the ability to tune the timing requirements for synaptic plasticity is specific to the cerebellum, or is it happening all over the brain?

I would be shocked if only the cerebellum had this property. It’s so powerful to be able to match the rules the synapse is using for learning to meet the demands of the specific circuit in which those synapses are embedded, and the specific learning task the circuit supports.

A lot of learning is feedback-driven, so the temporal credit assignment problem is a challenge for every brain area, not just the cerebellum. We hope that our insights from studying the cerebellum, which is relatively tractable, will inform understanding of feedback-based learning throughout the brain.

Why is this flexibility so significant?

Everyone has assumed there are a few generic rules, such as that spike-timing-dependent plasticity needs presynaptic activity to occur 10 to 20 milliseconds before postsynaptic activity. Lots of synapses use that rule to implement plasticity. But we show there is a lot more specialization that occurs through experience. The timing requirements for inducing plasticity are themselves plastic. We think it’s a mechanism for meta-learning, which is learning how to learn.

We know humans and animals are good meta-learners. Learning one set of skills makes you better at learning another skill. People who know a bunch of languages will learn the next one faster. It’s a general property of biological brains that distinguishes us from machines. The hallmark of intelligence is not just that you can learn — machines can learn, but humans and animals can learn to learn. But very little has been done to figure out how the brain does this. I think we have found maybe the first neural mechanism for learning how to learn.

What’s the next step?

In order for the rules governing plasticity to be adaptively tuned, you need a tunable molecular clock in the cerebellar neurons. We are working on determining the molecular machinery that does this. We are also examining whether the ability of synapses to tune the rules governing plasticity and learning persists into adulthood. Are the synaptic learning rules tuned once during development to match task demands, or are these rules constantly being tweaked throughout life to improve the way learning is implemented? We have some hints it may be the latter.