Recurrent Connections Improve Neural Network Models of Vision

When light hits the retina, it sets off a chain reaction of neural activity that travels through many brain areas. This complex processing stream is responsible for everything vision can do, including recognizing objects and taking in scenes. In the past several years, neuroscientists have demonstrated how convolutional neural networks — models frequently used in the fields of computer vision and artificial intelligence — capture many properties of the visual system in the brain. James DiCarlo, a neuroscientist at the Massachusetts Institute of Technology and an investigator with the Simons Collaboration on the Global Brain, uses these models to dissect how vision works. Two new papers from his lab demonstrate how convolutional neural networks can enhance both our understanding of the visual system and our ability to control it.

In convolutional neural networks, information usually flows in a feed-forward way from the bottom up. An image is put into the network and processed through a series of layers, which eventually produce a useful output, such as the name of the object in the image. The brain has both a feed-forward structure and recurrent pathways: Information can get sent back from one area to a previous one or echo around the same area multiple times. Studies suggest this extra processing helps the brain interpret challenging visual information, such as objects that are occluded or viewed from unusual angles.

The first study, published in Nature Neuroscience in April, expands on this hypothesis. First author Kohitij Kar and collaborators found images that are difficult for a feed-forward model to classify but easy for humans and monkeys to interpret, although they take slightly longer to classify these challenging images than normal ones. This delay suggests that some recurrent processing is involved. The researchers then looked at how neural activity in the monkey’s brain evolves as these images are processed. A benefit of convolutional neural networks is that the response of different layers in the model can be used to predict the response of neurons in different brain areas. Kar and collaborators found that the feed-forward model predicts the activity of neurons fairly well at early stages (up to 0.1 second into the response) but struggles at later time points.

When a convolutional neural network is not performing well, researchers in computer vision tend to add more layers to it, making it ‘deeper.’ The DiCarlo lab tested whether such deeper networks could better predict neural responses to their challenging images, under the assumption that a network with more layers, which compute over space, resemble recurrent pathways, which compute over time. These deeper networks were indeed better than the shallower model at predicting neural activity at later time points. Finally, the authors added recurrent connections to the structure of their original model and found that responses at later time points in the model better matched later time points in the data. Specifically, when recurrent connections were added to this ‘shallower’ network, it predicted neural activity as well as the deeper model did. Overall, this work strongly suggests that recurrent processing is an important contributor to computation in the visual system.

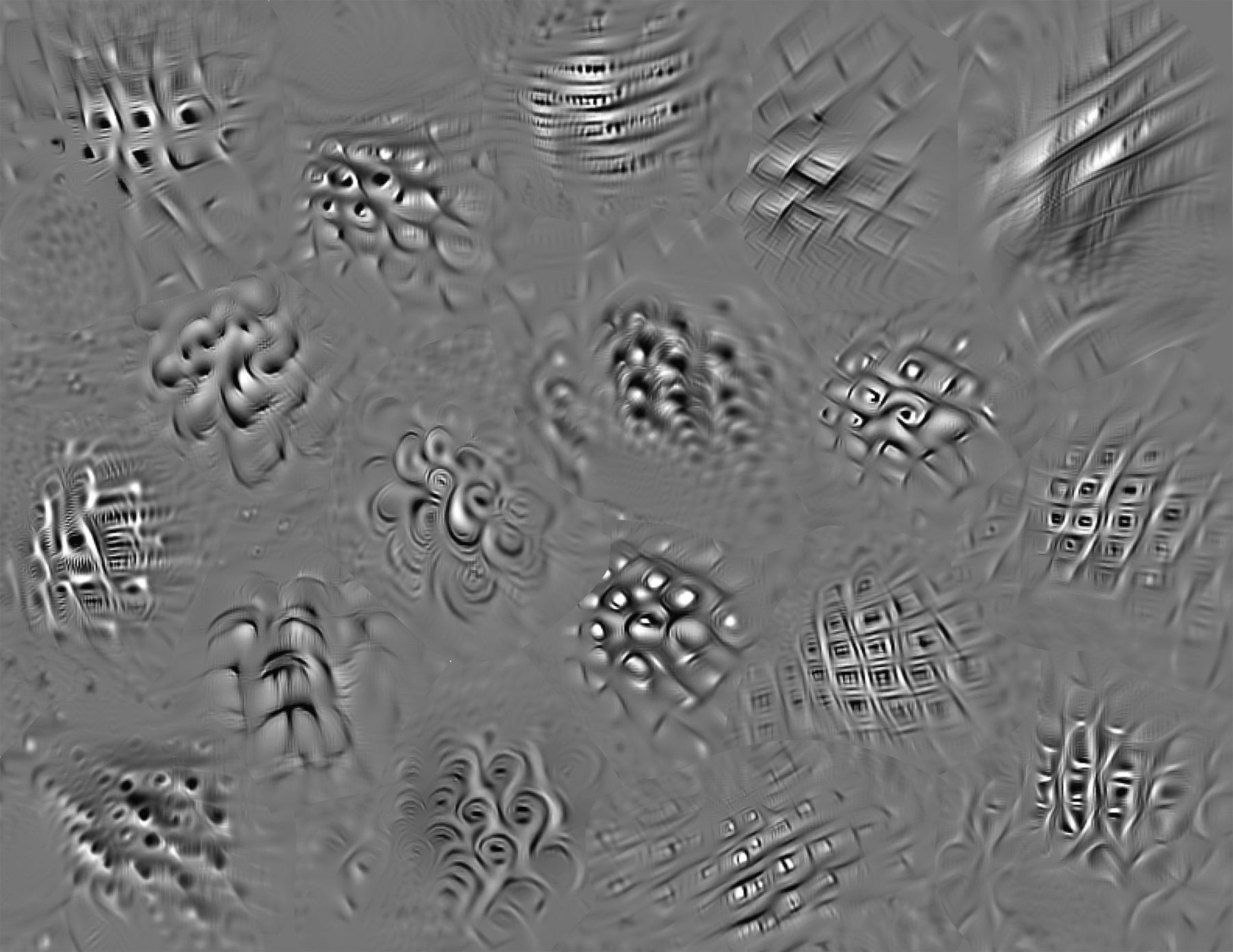

In the second study, published in Science in May, convolutional neural networks were used not just to predict the firing of neurons in the visual system but to control it. Scientists have tried for many decades to discover what kinds of images are best able to trigger neurons in the visual system. Common images for visual area V4, for example, include curved lines and abstract shapes. In this study, first authors Pouya Bashivan and Kar wanted to see if they could design images that would drive the activity of these V4 neurons more strongly than existing images do. To achieve this, they effectively ran their model in reverse: Rather than putting in an image and getting a prediction of neural activity, they put in the desired neural activity and got an image as the output.

The resulting images contained patches of repeating textures and were in fact able to drive neurons to fire at a rate 39 percent higher than the highest firing rate observed in response to regular images. In addition to pushing individual neurons to their extremes, the authors also explored ways of controlling multiple neurons at once. They produced images that drove one neuron’s activity up and others’ down, providing a noninvasive means of controlling neural activity. The fact that these models can create images that successfully drive neurons in the brain bolsters their suitability as models of biological vision.

When neuroscientists previously tried to find images that require recurrent processing or patterns that can drive neurons strongly, they had to rely on assumptions and clever guesses about what matters to the visual system. Convolutional neural networks, which in the words of the authors in the second study are “unconstrained by human intuition and the limits of human language,” can better achieve these goals. This attribute will make them important tools for visual neuroscience.