Multitasking Neural Networks Balance Specialization With Flexibility

Each hand of the card game euchre can have a different trump suit, and the ranking of the trump cards follows an arbitrary pattern. For every play you make, you must remember multiple abstract rules and combine them appropriately with your observations about the state of the game. Somehow, our brains learn and apply a seemingly unlimited number of such rules across different hands, different card games and many other kinds of tasks, without forgetting what they learned before.

In a new study published in Nature Neuroscience in January, Xiao-Jing Wang’s group at New York University used artificial neural networks to investigate how the brain can do this. “We originally wanted to understand why there are multiple areas in the brain,” says Guangyu Robert Yang, a former graduate student in the Wang lab and now a junior fellow of the Simons Society of Fellows. “The intuition was that because you need to specialize to do many tasks, you will have multiple areas.”

The investigators trained their neural networks to perform 20 interrelated cognitive tasks, with each network learning all 20 tasks simultaneously. The tasks included commonly studied decision-making and visual-discrimination tasks, some with delay periods that engage working memory. The researchers then looked inside the networks to understand how they work.

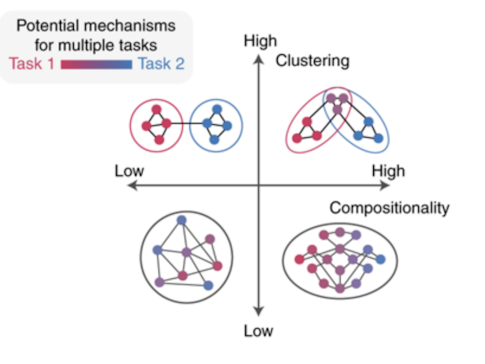

They found that after training, the networks develop clusters of units, each of which is active during a particular subset of tasks. By inactivating each cluster and observing the effect on task performance, they found that the clusters are often dedicated to specific functions. Some represent visual inputs, others compare or interpret those inputs, and some are responsible for generating a final response.
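One way to find such clusters, in the spirit of the analysis described above, is to measure how strongly each unit's activity varies within each task and then group units with similar profiles. The sketch below is illustrative only: it assumes recorded activity is available as an array of shape (tasks, samples, units), uses synthetic data in place of a trained network, and its function names (`normalized_task_variance`, `kmeans`) are hypothetical rather than taken from the study's code.

```python
import numpy as np


def normalized_task_variance(activity):
    """activity: array of shape (n_tasks, n_samples, n_units).

    Returns an (n_units, n_tasks) array: each unit's variance across samples
    within each task, divided by that unit's largest variance over all tasks.
    """
    var = activity.var(axis=1).T               # (n_units, n_tasks)
    peak = var.max(axis=1, keepdims=True)
    return var / np.where(peak > 0, peak, 1.0)  # avoid dividing by zero


def kmeans(x, k, n_iter=50):
    """Plain k-means with deterministic farthest-point initialization."""
    centers = [x[0]]
    for _ in range(1, k):
        # Pick the point farthest from all centers chosen so far.
        d = np.min([((x - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(x[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dists = ((x[:, None, :] - centers[None]) ** 2).sum(-1)
        labels = np.argmin(dists, axis=1)
        new = np.array([x[labels == j].mean(axis=0) if np.any(labels == j)
                        else centers[j] for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels


# Synthetic stand-in for a trained network (ASSUMPTION, not study data):
# units 0-3 vary only during tasks 0-1, units 4-7 only during tasks 2-3.
rng = np.random.default_rng(0)
n_tasks, n_samples, n_units = 4, 200, 8
activity = 0.01 * rng.standard_normal((n_tasks, n_samples, n_units))
activity[:2, :, :4] += rng.standard_normal((2, n_samples, 4))
activity[2:, :, 4:] += rng.standard_normal((2, n_samples, 4))

tv = normalized_task_variance(activity)
labels = kmeans(tv, k=2)
print(labels)
```

A lesion test along these lines would then silence all units that share a label and re-measure performance on each task; that step needs a trained network and is not shown here.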

Understanding how these networks form clusters could help us understand the prefrontal cortex (PFC), the brain region responsible for much of our ability to perform these kinds of complex cognitive tasks. The neural networks in the study, though tiny compared with even a subregion of the PFC, are able to identify sensory and cognitive processes shared among the required tasks and assign clusters of units to perform them. This makes them well suited for studying the factors that give rise to clusters and how large and interconnected those clusters should be.

The trained networks also display some signatures of ‘compositionality,’ the ability to combine basic computational elements to solve more complex and even completely novel problems. This ability is an important building block of cognition. In some cases, the networks can, without explicit training, perform tasks based on rules that are a composite of multiple previously learned rules. For example, imagine a task with the rule [Wait AND GoLeft]. The network can also perform this task correctly when, instead of that rule, it is given a combination of three other rules: [Wait AND GoRight] plus [GoLeft] minus [GoRight]. This indicates that the network is able to extract and generalize the essential components of the rules.
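The rule combination above can be read as arithmetic on rule inputs. The sketch below assumes, purely for illustration, that the network's internal representation of each rule is roughly the sum of hypothetical component vectors for ‘Wait,’ ‘GoLeft’ and ‘GoRight,’ plus a little noise; the embedding matrix `W` and every name in the code are invented stand-ins, not the study's trained weights. Under that assumption, the combined input lands closest to the representation of [Wait AND GoLeft].

```python
import numpy as np

rng = np.random.default_rng(1)
dim = 64

# Hypothetical component vectors. ASSUMPTION: rule representations are
# approximately additive in these components (illustrative, not learned).
wait, go_left, go_right = (rng.standard_normal(dim) for _ in range(3))


def noisy(v):
    """Add a little noise so the additivity is only approximate."""
    return v + 0.1 * rng.standard_normal(dim)


rules = ["GoLeft", "GoRight", "Wait AND GoLeft", "Wait AND GoRight"]

# Hypothetical rule-embedding matrix: column i represents rule i.
W = np.stack(
    [noisy(go_left), noisy(go_right), noisy(wait + go_left), noisy(wait + go_right)],
    axis=1,
)


def one_hot(name):
    """One-hot rule input for a named rule."""
    v = np.zeros(len(rules))
    v[rules.index(name)] = 1.0
    return v


def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Combined rule input: [Wait AND GoRight] + [GoLeft] - [GoRight].
composite = one_hot("Wait AND GoRight") + one_hot("GoLeft") - one_hot("GoRight")
rep = W @ composite  # representation the combined input produces

# Compare against the representation of each trained rule.
scores = {name: cosine(rep, W[:, i]) for i, name in enumerate(rules)}
best = max(scores, key=scores.get)
print(best, round(scores[best], 3))
```

If the representations were not roughly additive, the combined input would have no reason to resemble [Wait AND GoLeft]; the sketch only shows why additivity is enough for this kind of generalization.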

Finally, the researchers wanted to know what happens when the networks are forced to learn tasks sequentially, instead of simultaneously. Humans typically learn tasks one at a time, and this poses an additional challenge: how to change the network so it can perform something new without disrupting what it learned before. The team found that sequentially trained networks assign additional jobs to already-employed neurons, resulting in a high proportion of neurons that respond to multiple task variables. This is a common feature of real PFC neurons, known as ‘mixed selectivity.’

Mixed selectivity gives a network flexibility because it can recruit neurons to do multiple tasks and learn new things quickly when necessary. However, if a particular job is important and used often, a dedicated cluster can do the job most efficiently. The need to balance mixed selectivity against dedicated clusters could explain puzzling observations in PFC data. “Many representations we observe in the brain are not necessarily the optimal solution to the task at hand, because the brain has to make many compromises,” Yang says. “It can’t throw away everything it has learned before. Often, people don’t really think about the neural mechanism in the context of all the other things the network has to do.”

The researchers plan to use the networks to generate predictions that can be tested in electrophysiological studies. This approach could help experimentalists choose the most appropriate tasks to answer specific questions about cortical computation. “Our network is very simple compared to what can be done now in machine learning, but we spent a lot of time understanding how it works,” Yang says. “The model can be tested in experiments in which human or nonhuman subjects are required to carry out multiple cognitive tasks, ideally designed to have a compositional structure.”