How the Brain Uses Neural Sequences in Navigation, Memory and Beyond

Two-photon imaging, a technique for recording the activity of large populations of neurons, has revolutionized neuroscience. It has given researchers unprecedented access to the language of the brain at the cellular and even subcellular level. David Tank, director of the Simons Collaboration on the Global Brain and a neuroscientist at Princeton University, was part of the group that first used the technique to study a living brain in the 1990s. Over the last 25 years, Tank and collaborators have continued to refine and expand the technique. Most notably, they have used it to study neural sequences: repeated patterns of neural activity that may play an important role in neural computation.

One of the first cases where scientists noticed neural sequences was in studies of navigation. When an animal traveled along a specific path, individual neurons in a population fired in an ordered, reproducible pattern. The activity of specific neurons corresponded to the animal’s position. Tank and others have since shown that neural sequences are a common feature of the brain, occurring in a number of different regions. Growing evidence suggests they are important in an array of cognitive tasks, including working memory and decision-making.

Tank described some of his work in the Albert and Ellen Grass lecture “Neural Sequences in Memory and Cognition” at the Society for Neuroscience conference in San Diego in November 2018. Here, he talks with SCGB about how his lab has used calcium imaging, multielectrode recording and virtual reality to study neural sequences and their role in cognition. An edited version of the interview follows.

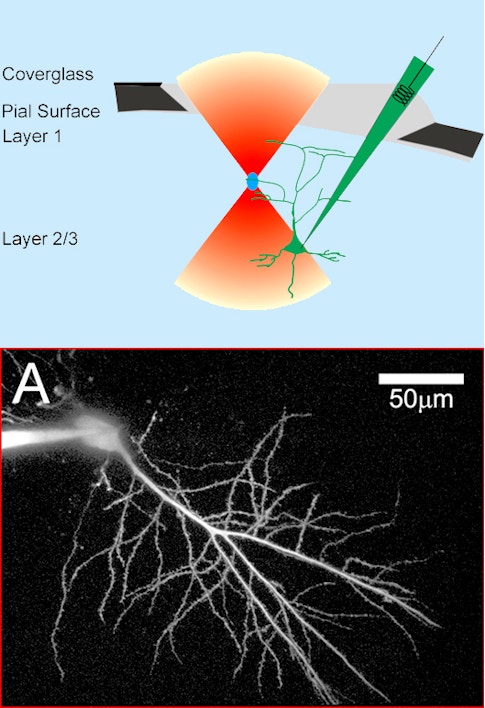

Bottom: Two-photon image of a CA1 neuron filled with a fluorescent dextran. Credit: Svoboda, Tank & Denk, Science (1996)

You were part of a team at Bell Labs that first applied two-photon imaging to the living brain in the 1990s. What was it like working there at that time?

Winfried Denk, working in Watt Webb’s lab at Cornell University, produced the first demonstration of two-photon excitation fluorescence imaging in 1990. A few years later, we were lucky enough to recruit Denk to Bell Labs into a group that ultimately became the Biological Computation Research department. In collaboration with Denk, Karel Svoboda, David Kleinfeld and I did the first in vivo two-photon imaging of calcium transients from individual neurons in the intact rodent brain. Using a microelectrode, we filled neurons in an anesthetized rat with a calcium indicator and imaged fluorescence changes in the cell body and dendrites through a glass coverslip forming a cranial window over the neocortex. Our paper was published in 1997. It was a very exciting time, and the opportunities seemed endless, but first it was necessary to transition the technology to awake animals. That transition took many iterative steps, including methods to label large neural populations and behavioral methods, like head-restrained virtual reality, that facilitate imaging with tabletop microscopes. We also experimented with head-mounted two-photon microscopes.

How have you since put that technology to work?

I moved to Princeton in 2001, and, working with a talented set of postdocs and graduate students (Tom Adelman, Forrest Collman, Daniel Dombeck, Chris Harvey, Anton Khabbaz), I developed a spherical treadmill for head-restrained mice. Initially used by Khabbaz in experiments using electrodes to study head-direction cells in the thalamus, this technology was extended by Dombeck, now at Northwestern, who developed a method for combining the spherical treadmill with the two-photon laser scanning microscope and a surgically implanted cranial window. Capitalizing on a method pioneered by Arthur Konnerth to label entire populations of neurons with calcium indicators injected into the brain, we were then able to do the first recordings of calcium dynamics from entire neural populations during awake behavior, when the mouse was running on the treadmill. This was the beginning of large-scale optical recording in awake rodents.

Chris Harvey, now at Harvard University, combined the treadmill with a projection system and hacked gaming software developed by Forrest Collman, now at the Allen Institute, to render a virtual environment on a screen surrounding the mouse. Harvey successfully trained mice to run back and forth along a linear track, receiving water rewards. The stability of the animal’s head in the system was amazing—good enough to do the first intracellular recordings of place cells during navigation. Very shortly thereafter, Daniel Dombeck imaged hippocampal place cells during virtual navigation. There are many systems like this now in use—almost an ecosystem of virtual reality setups. Over the last few years, we (and many others) have improved the method, and can record from thousands of neurons simultaneously.

Where do you see neural sequences?

A classic example is place cells: As an animal runs down a track, each place cell becomes active in succession, creating a characteristic sequence. We didn’t discover these sequences, but we were the ones to first image them, using large-scale calcium imaging in virtual reality.

When did you first observe neural sequences?

Dombeck and Harvey combined two-photon microscopy, the treadmill and virtual reality to image hippocampal place-cell sequences. Neurons in the dorsal hippocampus fired in a sequence as animals ran from left to right. It was a direct demonstration using optical imaging of something that had been known using electrophysiology since the discovery of place cells. You have bumps of activity that tile the environment.
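The "bumps of activity that tile the environment" can be illustrated with a toy simulation. The sketch below is not the lab's analysis pipeline; it assumes idealized Gaussian place fields on a one-dimensional track, and the function names and parameters (`simulate_place_fields`, `sort_by_peak`, the field width) are hypothetical. It shows one common way a sequence becomes visible: sort simultaneously recorded cells by the position where each is most active.

```python
import numpy as np

def simulate_place_fields(n_cells=50, n_bins=100, width=5.0, seed=0):
    """Toy data (an assumption, not real recordings): one Gaussian
    activity bump per cell, centered at a random track position."""
    rng = np.random.default_rng(seed)
    centers = rng.uniform(0, n_bins, size=n_cells)
    positions = np.arange(n_bins)
    # rates[i, j] = activity of cell i when the animal is in position bin j
    rates = np.exp(-0.5 * ((positions[None, :] - centers[:, None]) / width) ** 2)
    return rates

def sort_by_peak(rates):
    """Order cells by the position bin of their peak activity."""
    peak_bins = np.argmax(rates, axis=1)
    order = np.argsort(peak_bins, kind="stable")
    return rates[order], peak_bins[order]

rates = simulate_place_fields()
sorted_rates, sorted_peaks = sort_by_peak(rates)
# Plotted as a heat map, sorted_rates shows a diagonal band:
# cells becoming active one after another along the track.
```

In the unsorted matrix the bumps look scattered; after sorting, the same data form an ordered sequence across the track.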

Why is it important to be able to look at lots of cells?

Sometimes, sequences can occur independent of the exact sequence of behavior, because they seem to be based on an internal cognitive behavioral variable. You can’t record individual cells on different trials because the exact sequence might not be observed at the same time or position during behavior. It’s only because you can record them all at once that you see it’s a sequence.

Your lab recently described a new kind of cell: reward cells. What are they?

In July 2018, Jeff Gauthier and I published a paper in Neuron in which we were able to identify subpopulations of cells that were more active in parts of the track linked to a reward. In the experiment, mice ran from one end of a track to another, exhibiting well-defined sequences in their place cells. Jeff moved the location of the reward in the virtual reality space and compared activity of the same neurons across different sessions. He found two types of cells. Some fired at the end of the track regardless of reward location. Others fired just before reaching the reward location. Their preferred location would shift with the location of reward. We call these reward cells.

Control experiments and analyses demonstrated that the cells are correlated specifically with reward rather than anticipation of a change of behavior, like slowing down, that is associated with rewards. Interestingly, different reward cells became active at different times relative to a reward, and thus their activity as a population formed a neural sequence. An analysis of the sequences suggested that they might also represent additional cognitive information such as the confidence of an upcoming reward.

How have you used virtual reality to explore cell sequences outside of spatial navigation?

Dmitriy Aronov, a postdoc in my lab who is now at Columbia University, developed a two-dimensional version of the rodent virtual reality environment. He found traditional place cells, grid cells, head-direction cells and even border cells. He also showed that if you slowly rotate the virtual arena relative to the fixed coordinates of the lab, place cells stay fixed relative to the rotating virtual arena. Despite some stationary cues, the rat was deeply engaged cognitively with the virtual environment.

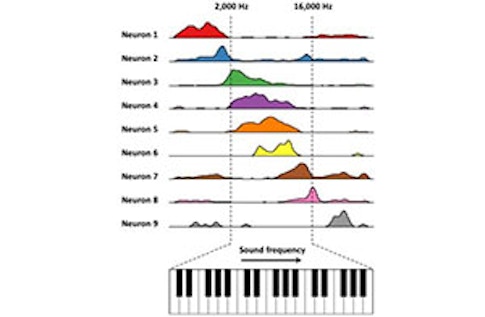

Based on that finding, we wondered whether we could use artificial cognitive environments to probe a long-standing question: Is the hippocampus involved in specialized spatial responses or more general cognitive functions? Can you find cells that represent a different continuous variable, such as sound frequency? We created a sound-manipulation task, where the rat presses a lever to activate a tone. The tone changes in frequency in proportion to how far the lever is pressed. The rat is trained to release the lever when it hears a target frequency. Aronov varied how quickly the frequency changed as a function of a given lever press on each trial, so the animal couldn’t memorize the time when it needed to release the lever. It had to interact with the changing sound frequency.

What did you find?

In the dorsal hippocampus, some of the recorded cells appear to be coding a particular frequency—they are frequency place cells. But neurons don’t just respond to sound. Other neurons seem to fire in response to other specific parts of the behavior, from pressing to releasing the lever, creating a sequence of neural activity that tiles the entire task. On trials where sound frequency changes quickly, the sequence occurs rapidly. On slow trials, the sequence occurs at a slower pace. [For more on this experiment, see “Hippocampus’ navigation system goes beyond spatial mapping.”] Similar firing fields and sequences spanning the trial were measured in the entorhinal cortex, from neurons that we later identified as grid cells and border cells during navigation in a traditional spatial environment.

Other labs, such as those of Howard Eichenbaum and György Buzsáki, have detected sequences in rodent hippocampi that represent other cognitive variables, such as elapsed time or the memory of an odor. This suggests a general idea: In the dorsal hippocampus, neural sequences are elicited parametrically with progress through the behavior, and the population codes that are elicited represent task-relevant variables.

How do neural sequences play a role in working memory and decision-making?

Harvey developed a task in which mice had to choose one of two arms in a T maze; a cue in the stem of the maze tells the animal whether to turn left or right. There’s a delay before the animal makes the decision, so it’s a combined working memory, decision-making and navigation task. Using two-photon imaging and virtual reality, Harvey observed a sequence of activity in the parietal cortex that splits in two. The trajectories diverge during the delay period. We called them “choice-specific sequences”; they encode the upcoming choice, left or right. The distance between the two trajectories increases with time, as does the ability to predict whether the animal will turn left or right.
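The link between diverging trajectories and predictability can be made concrete with simulated data. The sketch below is an illustration under stated assumptions, not the analysis used in the study: the population activity is synthetic, the choice signal is assumed to ramp linearly during the delay, and the decoder is a simple nearest-centroid classifier. All function names and parameters are hypothetical.

```python
import numpy as np

def simulate_trials(n_trials=200, n_cells=30, n_time=20, noise=1.0, seed=0):
    """Synthetic population activity (an assumption): each cell carries a
    choice signal whose strength ramps up linearly over the delay."""
    rng = np.random.default_rng(seed)
    choices = rng.integers(0, 2, size=n_trials)   # 0 = left, 1 = right
    pref = rng.normal(size=n_cells)               # per-cell choice preference
    ramp = np.linspace(0.0, 1.0, n_time)          # separation grows with time
    sign = 2 * choices - 1
    signal = sign[:, None, None] * pref[None, :, None] * ramp[None, None, :]
    activity = signal + noise * rng.normal(size=(n_trials, n_cells, n_time))
    return activity, choices                      # (trials, cells, time)

def trajectory_distance(activity, choices):
    """Euclidean distance between mean left and right trajectories per time bin."""
    left = activity[choices == 0].mean(axis=0)
    right = activity[choices == 1].mean(axis=0)
    return np.linalg.norm(left - right, axis=0)

def decode_accuracy(train_x, train_y, test_x, test_y):
    """Nearest-centroid decoding of choice, done separately at each time bin."""
    left = train_x[train_y == 0].mean(axis=0)
    right = train_x[train_y == 1].mean(axis=0)
    d_left = np.linalg.norm(test_x - left[None], axis=1)    # (n_test, n_time)
    d_right = np.linalg.norm(test_x - right[None], axis=1)
    pred = (d_right < d_left).astype(int)
    return (pred == test_y[:, None]).mean(axis=0)

activity, choices = simulate_trials()
distance = trajectory_distance(activity, choices)
# Fit centroids on the first half of trials, test on the held-out second half.
accuracy = decode_accuracy(activity[:100], choices[:100],
                           activity[100:], choices[100:])
```

In this toy setting, both `distance` and `accuracy` rise across the delay, mirroring the qualitative relationship described above. Real data would call for cross-validation and a decoder suited to the recording statistics.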

Are these sequences restricted to certain parts of the brain?

Sue Ann Koay, a postdoc at Princeton working on our multi-PI BrainCogs project, has imaged across different areas during a related task and finds choice-specific sequences that tile the behavior in each area. We can decode virtually everything about the behavioral experiment from the activity in these sequences. As neural trajectories diverge, we can accurately predict whether the animal will choose left or right. We can also decode, in a graded fashion, the number of cues the animal saw on the left versus the right, whether the animal will get a reward and whether it got a reward on the previous trial. That’s really amazing.

What function do these sequences perform in the brain?

Everyone is trying to make sense of these representations. What do they mean? Are they representations for learning, policymaking, planning? Or are they an epiphenomenon? We don’t know yet. There are tantalizing attempts to look at function with optogenetic perturbation. Lucas Pinto, also on the BrainCogs project, has silenced parts of the dorsal cortex with a laser as the animal performed the behavior. In all areas where sequences are observed, we see perturbation of behavior. That’s not the case for a control task, where a direct cue tells animals whether to turn left or right. It’s specific to the cognitive task. Another question is how they relate to other types of sequences. For example, snippets of the sequences we observe in the hippocampus that tile behavior show up again in fast sequences that occur during sharp wave ripples. The “slow” sequences that tile the behavior may be setting up a brain representation that is then exploited during fast sequences to plan actions or help make decisions. Finally, sequences are observed in premotor areas during sequential behavior, as in birdsong. Is it a general property of cortex to build representations that manifest as sequences?

What needs to happen in order to make sense of these sequences?

It’s very early days. We know that sequences contain information about evidence in the task up to that point, about the upcoming choice, about the previous trial’s choice and the previous trial’s reward. They seem to be a representation of the entire behavioral state and the history of the animal. We have to think about how that fits into a picture of cognition. That’s pretty mysterious right now. Another big question concerns the circuit mechanisms. Are the sequences we observe in a given region produced locally within the circuit, or do they represent information broadcast globally across cortical areas to aid in interpretation of local computations?

Are you surprised by the ubiquity of neural sequences in the brain?

It’s not surprising that the brain shows neural sequences, since they manifest the sequential nature of behavior. What is surprising is that they seem to represent more abstract things related to the history of the animal’s behavior. In addition, these sequences can decouple in interesting ways from the sensory stimulus itself. They are not just driven by the sensory stimulus but are internally generated in some sense. For example, when an animal is running down the stem of a T maze in a delayed match-to-sample experiment, the neocortex shows different sequences of activity depending on what cue the animal saw at the beginning of the trial. The environment in the stem of the maze looks exactly the same; what’s different is the animal’s interpretation of it. It has to remember the previous stimulus and what task it’s doing, and put those variables into a model of the world and a model of what it’s doing. In general, I think we need new tools to detect and analyze sequences in neural data [See ‘Finding Neural Patterns in the Din’], and also new models and theories that explore the computational capabilities of sequences and the neural circuit representations that they embody.