Two Neuroscience Laws Governing How We Sense the World Finally United After 67 Years

They’re two similar-sounding questions: How bright is this light? And was the left light brighter than the right one? Yet for 67 years, neuroscientists and psychologists have used two different mathematical laws to describe how we gauge what our senses are telling us in absolute terms and in relative terms.

In a paper published June 10 in the journal Proceedings of the National Academy of Sciences, researchers at the Flatiron Institute’s Center for Computational Neuroscience (CCN) finally unite the two laws using a new theoretical framework.

The breakthrough paves the way for further research into the connections between our brain’s biological processes and the computations they perform to let us perceive the world, says CCN director and study coauthor Eero Simoncelli. “The scientific goal is to build a framework that describes those connections and relationships, which allows both the perceptual and the neurobiological sides of the field to interact with each other,” says Simoncelli, who is also a Silver professor of neural science, mathematics, data science and psychology at New York University (NYU).

Two laws seem to govern many of our senses, from identifying the sweeter cookie to determining just how loud that annoying car alarm is.

Ernst Heinrich Weber developed his law of perceptual sensitivity in 1834. Weber’s law says that our ability to tell whether two stimuli differ depends on the proportional difference between the two. For example, if you lift a five-pound dumbbell and someone adds two pounds to it, you will probably notice the increase. But if someone increases a 50-pound dumbbell by the same amount, you’ll have a harder time telling the difference.
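The dumbbell example can be worked through numerically. The sketch below is a minimal illustration, not a model from the study: the function names are hypothetical, and the 10 percent threshold is an assumed value chosen only to make the contrast visible.

```python
def weber_fraction(baseline: float, increment: float) -> float:
    """Proportional change: the increment divided by the baseline intensity."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return increment / baseline


def is_noticeable(baseline: float, increment: float, threshold: float = 0.1) -> bool:
    """True if the proportional change exceeds the threshold fraction.

    The default threshold of 0.1 is a hypothetical value for illustration only.
    """
    return weber_fraction(baseline, increment) > threshold


light = weber_fraction(5.0, 2.0)   # 2 pounds added to a 5-pound dumbbell
heavy = weber_fraction(50.0, 2.0)  # 2 pounds added to a 50-pound dumbbell
print(f"5 lb + 2 lb: {light:.0%} change")   # 40% change
print(f"50 lb + 2 lb: {heavy:.0%} change")  # 4% change
```

The same two pounds is a 40 percent change in one case and a 4 percent change in the other, which is why the second is harder to notice.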

In 1957, Stanley Smith Stevens proposed another law, stating that perceived intensity grows as a power of stimulus strength: it is proportional to the stimulus strength raised to an exponent. That exponent varies widely depending on whether a participant is judging brightness, loudness or heaviness.
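A short sketch shows how much the exponent matters. The function name and the three exponents below are hypothetical, chosen only to show an exponent below 1, equal to 1 and above 1. They are not measured values for any particular sense.

```python
def perceived_magnitude(intensity: float, exponent: float, scale: float = 1.0) -> float:
    """Stevens' power law: perceived magnitude = scale * intensity ** exponent."""
    if intensity < 0:
        raise ValueError("intensity must be non-negative")
    return scale * intensity ** exponent


# Hypothetical exponents, chosen only to show the three regimes.
for label, exponent in [("compressive", 0.5), ("linear", 1.0), ("expansive", 2.0)]:
    # How much stronger does a stimulus feel when its strength is quadrupled?
    ratio = perceived_magnitude(4.0, exponent) / perceived_magnitude(1.0, exponent)
    print(f"{label} (exponent {exponent}): 4x stimulus -> {ratio:g}x perceived")
```

With an exponent below 1, quadrupling the stimulus feels like less than a fourfold increase. With an exponent above 1, it feels like more.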

While Weber’s law applies to relative assessments of stimuli and Stevens’ law applies to absolute measurements, both laws aim to clarify the internal representations behind perceptual judgments. Yet the two laws use different mathematical equations that appear inconsistent with each other, and for decades, scientists have failed to develop a single explanation, with a corresponding equation, for both scenarios.

Simoncelli and his team used mathematics and computational modeling to show that Weber’s law and Stevens’ power law can coexist. At the heart of their framework is the idea that our ability to gauge the magnitude of a stimulus (for instance, on a scale from 1 to 10) reflects how we internalize our sensory inputs based on factors such as our memories of similar experiences.

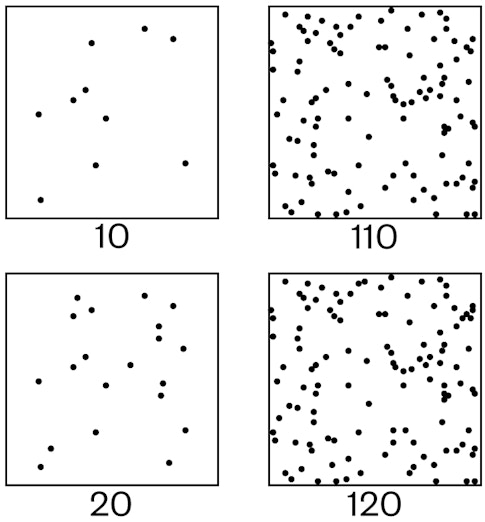

When we compare two stimuli, another important factor comes into play: noise. Random fluctuations in the brain’s neural networks result in natural variability in the representation of sensory inputs. This in particular affects our ability to tell stimuli apart. The Simoncelli team’s new framework expands on the idea that we can detect a change in stimulus only when our internalized representation of that change is larger than the variability, or noise, involved in our brain’s response to the stimulus. Working in reverse, measurements of perceptual sensitivity can be related to the variability of underlying neural responses.

By incorporating both the internalized representation and the noise, the new framework allows a reconciliation of Weber’s and Stevens’ laws.
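One way to see how the two ingredients could fit together is a toy calculation. The sketch below rests on assumptions that go beyond what is stated here: the internal representation is taken to be a power function of the stimulus, the noise is taken to grow in proportion to that representation, and a change counts as detectable once the shift in the representation equals the noise level. All names and parameter values are hypothetical, and this is not presented as the authors' actual model.

```python
def internal_response(stimulus: float, alpha: float) -> float:
    """Assumed internal representation: a power function of the stimulus."""
    return stimulus ** alpha


def noise_std(stimulus: float, alpha: float, c: float) -> float:
    """Assumed noise level: proportional to the internal representation."""
    return c * internal_response(stimulus, alpha)


def detection_threshold(stimulus: float, alpha: float, c: float) -> float:
    """Smallest increment whose change in representation equals the noise level.

    Solves (stimulus + d) ** alpha == stimulus ** alpha + noise for d.
    """
    target = internal_response(stimulus, alpha) + noise_std(stimulus, alpha, c)
    return target ** (1.0 / alpha) - stimulus


ALPHA = 0.5  # hypothetical exponent of the internal representation
C = 0.1      # hypothetical noise-to-response ratio

for stimulus in (5.0, 50.0, 500.0):
    d = detection_threshold(stimulus, ALPHA, C)
    print(f"baseline {stimulus:g}: threshold {d:.3f}, ratio {d / stimulus:.3f}")
```

Under these assumptions, the threshold works out to the baseline multiplied by the constant (1 + c)^(1/alpha) - 1, so the just-detectable change is a fixed proportion of the baseline, as in Weber’s law, even though the representation itself follows a power function of the kind Stevens described.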

The unified framework explains previously observed discrepancies between measurements of absolute and relative perception. It also accurately predicts participants’ ratings from previous studies across a range of senses, from tasting sugar to hearing white noise.

Although many researchers use either sensitivity or intensity rating scales, “rarely is anyone looking at these two types of data together,” says study lead author Jingyang Zhou, a CCN postdoctoral fellow and researcher at NYU’s Center for Neural Science. As a result, finding datasets to analyze was challenging. Zhou hopes that more researchers will consider collecting both types of data in the future, both to better understand the internal mechanisms of perception and to provide further tests of the new framework.

“My hope would be that this step toward unification of a large set of disparate perceptual and physiological measurements can provide a launching point for future investigation,” Simoncelli says.