In Mapping Mistakes, a Window to the Inner Mind

Several years ago, I participated in a study where I had to play a computer game that became progressively more difficult. To my surprise, the scientist running the study told me I performed better on the harder versions of the game. He said that wasn’t unusual — some people stay engaged when the game is more challenging but get bored during the easier trials and lose focus.

Mistakes like these are known in the literature as lapses: instances where you have all the information you need to make a decision but still make the wrong choice. Descriptions of lapses appear throughout the decision-making field, and the lapses themselves occur in a variety of species and situations. People asked to report obvious flashes of light sometimes miss them, even when they can detect much more subtle cues. Rodents routinely make mistakes in 10 to 20 percent of decision-making trials, despite sometimes performing with nearly 100 percent accuracy.

Scientists have put forth a number of explanations for lapses, ranging from lack of attention to motor mistakes, such as accidentally pressing the wrong button. But existing theories don’t satisfactorily explain the various patterns of lapses — why they may occur in bouts or sometimes affect easier or more ambiguous trials, for example. New efforts to study decision-making that move beyond the standard input-output approach offer an alternative avenue for studying lapses. These efforts track the brain’s internal state and how it affects the way animals use sensory signals to make decisions.

“What’s always made decisions interesting is that incoming signals don’t operate in a reflexive way,” says Anne Churchland, a neuroscientist at the University of California, Los Angeles and an investigator with the Simons Collaboration on the Global Brain. One morning you might brake at a yellow light, for example, while the next day you might hit the gas. “The same stimulus will do different things depending on what state the brain is in, and we are only starting to be able to characterize those states.”

Most studies of decision-making have assumed that animals are in the same state — using the same decision-making strategy — throughout the course of an experiment. “It’s wrong to think of the brain as in the same state over time, but that’s what everyone has always done,” says Ilana Witten, a neuroscientist at Princeton University and an SCGB investigator. New research from Churchland, Witten and others demonstrates how that state can shift over time, switching among different strategies, and highlights the profound impact an animal’s internal state can have on its behavior.

Searching for hidden states

At first glance, male fruit flies can behave unpredictably when attempting to woo a mate. Sometimes they actively chase a nearby female, while other times they appear to ignore her. In research published in 2019, then-SCGB fellow Adam Calhoun and SCGB investigators Mala Murthy and Jonathan Pillow took a more formal look at this variability. They found that the ability to predict a fly’s courting behavior from its sensory cues varied, and they hypothesized that male flies, who sing to woo females, might change their song strategies over time. To explore these changes, they analyzed males’ behavior using a hidden Markov model (HMM), which can help find patterns in otherwise noisy data. The study revealed that male flies could be in one of three different states: close to a female but moving slowly, actively chasing a female, or uninterested in the female. How the fly used sensory information to guide his song depended on his state. “We have lacked tools for identifying internal states, but this approach allows us to identify changes in state from the basis of behavior alone,” says Pillow, a computational neuroscientist at Princeton University. (For more, see “How Fruit Flies Woo a Mate.”)

Mice trained on decision-making tasks show a similarly intriguing pattern of behavior. Sometimes they can perform the task highly accurately. But sometimes they make mistakes, which don’t necessarily correlate with the difficulty of the task. Indeed, researchers studying rodents note that the animals routinely fail on 10 to 20 percent of trials. Masses of standardized decision-making data produced by the International Brain Lab (IBL), a collaboration among 22 labs all implementing the same task, offered an opportunity to dig deeper into this observation. “The IBL and the rise of mouse decision-making in general inspired us to look at decision-making in a way that we hadn’t before,” Pillow says. (For more on the IBL, see “Building a New Model for Neuroscience Research.”)

In the IBL task, mice turn a wheel to indicate whether a grating with different levels of contrast appeared on the left or right. In a classic model of lapses, animals are either engaged or disengaged, with most lapses occurring in the latter state. But in analyzing animals’ performance with an HMM, Pillow, graduate student Zoe Ashwood and collaborators found that three states best explained the data — an engaged state, where the animal performed almost perfectly, and two disengaged states, where the animal tended to stick with a left or right choice, regardless of sensory information. The states persisted across dozens of trials and occurred in the vast majority of animals. “This paper resonates because it offers a way of quantifying an effect researchers had been aware of but hadn’t been able to quantify,” Pillow says.
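The contrast between the classic lapse model and a multi-state account can be sketched in a few lines of Python. This is an illustration only, not the researchers' code: the state names follow the description above, but the sensitivity and bias weights, the contrast levels and the `stay` probability are all hypothetical values chosen for the example.

```python
import math
import random

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

def p_right_classic(contrast, sensitivity=10.0, lapse=0.1):
    """Classic lapse model: on a fraction `lapse` of trials the animal guesses."""
    return lapse * 0.5 + (1.0 - lapse) * logistic(sensitivity * contrast)

# Hypothetical (sensitivity, bias) weights for each state.
STATES = {
    "engaged": (10.0, 0.0),      # choice follows the stimulus
    "biased_left": (1.0, -2.0),  # mostly ignores the stimulus, favors left
    "biased_right": (1.0, 2.0),  # mostly ignores the stimulus, favors right
}

def p_right_state(contrast, state):
    """State-dependent model: each state has its own psychometric curve."""
    sensitivity, bias = STATES[state]
    return logistic(sensitivity * contrast + bias)

def simulate(n_trials, stay=0.98, seed=0):
    """Simulate choices from an animal that occasionally switches state."""
    rng = random.Random(seed)
    state = "engaged"
    trials = []
    for _ in range(n_trials):
        if rng.random() > stay:  # rare switch to one of the other states
            state = rng.choice([s for s in STATES if s != state])
        # Signed contrast: negative means the grating is on the left.
        contrast = rng.choice([-1.0, -0.25, -0.125, 0.125, 0.25, 1.0])
        chose_right = rng.random() < p_right_state(contrast, state)
        trials.append((state, contrast, chose_right, chose_right == (contrast > 0)))
    return trials

def accuracy_by_state(trials):
    """Fraction of correct trials within each state."""
    totals, hits = {}, {}
    for state, _, _, correct in trials:
        totals[state] = totals.get(state, 0) + 1
        hits[state] = hits.get(state, 0) + int(correct)
    return {s: hits[s] / totals[s] for s in totals}
```

In the classic model, errors are scattered independently across trials at a fixed rate. In the simulated multi-state animal, calling `accuracy_by_state(simulate(5000))` shows errors concentrated in the biased states, which arrive in runs because the state tends to persist from one trial to the next.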

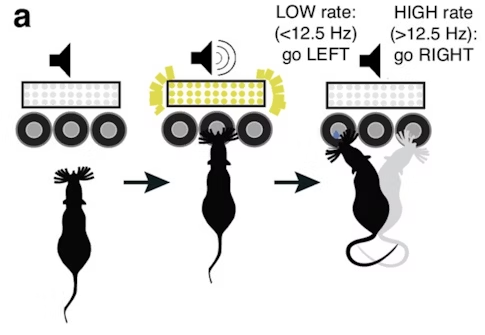

To make sure the findings weren’t specific to the IBL task, researchers analyzed two other datasets. In a mouse study in which animals had to report whether they heard a low- or high-frequency sound, the model identified four states — engaged, biased left, biased right and ‘win-stay,’ where animals stick with their previous rewarded choice. In a human study of visual discrimination, in which people had to decide whether a group of moving dots was more or less coherent than the previous stimulus, two- and three-state models better explained the data than the classical lapse model. The findings were published in Nature Neuroscience in February.

Scientists don’t yet know what triggers the switch between states. HMMs predict the probability that an animal will move from one state to another, but they do not capture the factors that drive the shift. Researchers plan to explore this question by applying linear regression models to identify whether satiety, fatigue, recent rewards and other variables can help predict whether an animal will transition to another state.
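To make the idea of transition probabilities concrete, here is a minimal sketch of what an HMM's transition matrix encodes. The matrix values are hypothetical, picked so that states last for dozens of trials, and are not taken from the study.

```python
# Hypothetical transition matrix: rows are the current state, columns the next.
STATE_NAMES = ["engaged", "biased_left", "biased_right"]
T = [
    [0.98, 0.01, 0.01],
    [0.03, 0.96, 0.01],
    [0.03, 0.01, 0.96],
]

def expected_dwell(T, i):
    """Mean number of consecutive trials spent in state i before leaving."""
    return 1.0 / (1.0 - T[i][i])

def stationary(T, n_iter=2000):
    """Long-run fraction of trials spent in each state (power iteration)."""
    n = len(T)
    pi = [1.0 / n] * n
    for _ in range(n_iter):
        pi = [sum(pi[i] * T[i][j] for i in range(n)) for j in range(n)]
    return pi
```

With these made-up numbers, the engaged state lasts about 50 trials on average and each biased state about 25. The matrix says how often switches happen, but nothing in it says why, which is the gap the planned regression analyses are meant to address.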

The state of circuits

The clear observation of different decision-making states raises the question: What is happening in the brain during these bouts? Witten, Pillow and collaborators are using a combination of virtual reality, neural recordings and optogenetic perturbation (silencing specific neurons with light) to explore how different neural circuits contribute to different states.

In a study published in Nature Neuroscience in March, researchers recorded neural activity in the striatum as animals navigated a virtual maze, deciding which way to turn based on visual cues. “We had qualitatively observed that mice did not use the same strategy across time but did not have a way to quantify it,” Witten says. Her team had also noticed that animals often didn’t respond to optogenetic perturbations in the same way — silencing a set of neurons might affect the animal’s decision under some circumstances but not others or on some trials but not all. “But we didn’t have a way to look at it,” she says.

The team applied the same HMM approach to their data, with surprising results. Animals could be in one of three different states as they navigated the maze: a disengaged state in which they tended to repeat the previous choice, or one of two engaged states that outwardly looked the same but responded differently to perturbation. In one engaged state, silencing neurons in part of the striatum influenced the animal’s choice. In the other, silencing had no effect. “We did not expect such an enormous effect,” Witten says. “Reviewers were very skeptical because it was so surprising. We had to do a lot of controls to get people to believe it.”

The findings suggest that the animal is employing different neural pathways to solve the task. “You might imagine when an animal is engaged, the same decision circuit is involved, but this paper reveals that’s not the case,” Pillow says. “Even when the animal is engaged, there is one state where it relies on the striatum and another where it does not.” These different strategies are not apparent from behavior alone. “I think it speaks to redundancy,” Witten says. “It suggests parallel brain circuits can achieve similar functions.”

As is the case for the IBL study, researchers don’t yet know what triggers the shift among different strategies. Witten’s team is exploring the idea that neuromodulators, which are released by neurons that project widely across the brain and have been implicated in internal states, play a role. High levels of norepinephrine, for example, may push the brain into a striatum-dependent state. To explore this question, researchers are measuring levels of different neuromodulators to see if their activity predicts state transitions.

Another major question is why an animal would switch among strategies that outwardly have the same impact — the animal makes the same choices — but internally rely on different circuitry. “It’s an exciting direction to go,” Pillow says. “What are these states good for? Are they important for learning? Does it help the animal keep track of different statistics of the environment or to keep track of behavior?” Researchers also plan to examine whether downstream brain regions show similar differences. “I don’t think state dependence is striatum-dependent,” Witten says. “I think it’s a brain state that changes.”

Pillow’s group is now collaborating with others to apply their approach to different types of data. They are working with SCGB investigator Carlos Brody and SCGB director David Tank, both at Princeton, to inactivate parts of the brain, such as the hippocampus, to find states that are vulnerable or resilient to inactivation and that therefore rely on different brain circuits.

The code the researchers used is publicly available and is usable with most datasets, Witten says. “I think if people ran the same analysis on their data, they will get a similar phenomenon.” She expects more and more people will apply the approach. “It explains so much.”

Lapsing into exploration

Though some lapses are well explained by inattention, others may reflect a more active process — the brain exploring new strategies, for example. Churchland’s lab was inspired to examine this possibility after noticing a trend in studies of multisensory decision-making. Both people and rodents were more likely to make mistakes when they were given just visual or just auditory information to make a decision, rather than both together. “We fretted about it a lot and did not have an explanation,” Churchland says. Simple lack of attention wasn’t a satisfactory explanation. “Why would animals be more likely to snooze on a unisensory trial?” she says.

One of Churchland’s students, Sashank Pisupati, came up with an alternative explanation — perhaps lapses are tied to uncertainty and exploration. When you’re learning a new game, for example, you may try different strategies, perhaps making a risky choice to see if it will deliver a large reward. “Often an optimal strategy is to balance exploration and exploitation,” Churchland says. “You don’t assume you know the game perfectly, so you make exploratory choices on a few trials to test the theory.” While this idea is common in the world of probabilistic decision-making, where there are no guarantees of right or wrong choices, researchers studying perceptual decision-making don’t typically incorporate the concept of exploration. “We assumed animals knew the rules,” Churchland says. “If they make a mistake, we thought it was from perceptual uncertainty.”

Exploration can be risky, so one strategy for balancing the risks and benefits is to choose the rewarded option when reward is certain but to explore when the likelihood of reward is lower. This would explain why animals are less likely to lapse — explore — when they have both auditory and visual information to support their choice. “Exploration is driven by uncertainty in information,” Churchland says.
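One simple way to picture this trade-off is a softmax choice rule, in which the chance of picking the less promising option shrinks as the gap in expected reward grows. The sketch below is an illustration under that assumption and is not the model used in the study; the function name, the `beta` parameter and the example reward probabilities are all hypothetical.

```python
import math

def explore_prob(p_reward_best, p_reward_other, beta=5.0):
    """Softmax probability of choosing the option with lower expected reward.

    p_reward_best  -- the animal's estimated chance of reward for the favored choice
    p_reward_other -- its estimated chance of reward for the alternative
    beta           -- hypothetical parameter: higher means less exploration
    """
    return 1.0 / (1.0 + math.exp(beta * (p_reward_best - p_reward_other)))

# Hypothetical estimates: a single sense leaves more doubt than two combined.
unisensory = explore_prob(0.80, 0.20)
multisensory = explore_prob(0.95, 0.05)
```

Under these assumed values, `multisensory` comes out smaller than `unisensory`: the more certain the expected reward, the fewer exploratory choices, which would show up in the data as fewer lapses.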

In research published in eLife last year, Churchland, Pisupati, then-graduate student Lital Chartarifsky-Lynn and collaborators tested this hypothesis by altering the probability of reward on left and right choices to bias animals toward an exploration or exploitation state. They then analyzed the rates of resulting lapses. Just as they had predicted, animals were less likely to lapse when they had a high expectation of reward. “By studying multisensory integration, we stumbled on this new explanation of lapses that we think is relevant to decision-making very generally,” Churchland says.

The two approaches — the HMM state model and uncertainty-guided exploration — are not mutually exclusive and address two distinct aspects of lapses, Churchland says. “Both things combined drive animals and humans to make lapses, and there are likely other explanations as well.” In both cases, an animal’s likelihood of lapsing reflects an internal representation — the brain’s estimate of uncertainty or its level of engagement — that shapes how sensory information is interpreted and guides how it’s used. Pillow and Churchland are now thinking about how to combine the two approaches to lapses in order to explore, for instance, whether there are extended states of exploration and exploitation, as they found with engaged and disengaged states.