Face Recognition Relies on Surprisingly Simple Code

Looking across a crowded theater, most of us can instantly recognize our friends, even if it’s dark or their faces are partly obscured. How exactly does the brain accomplish this feat? New research has uncovered a simple code that neurons use to process facial information.

The findings, published in Cell in June, suggest that the face-processing neurons don’t respond to a specific person. Instead, they encode specific features of faces, such as the distance between the eyes. “This new study represents the culmination of almost two decades of research trying to crack the code of facial identity,” Doris Tsao, a neuroscientist at the California Institute of Technology (Caltech), said in a news release. “It’s very exciting because our results show that this code is actually very simple.”

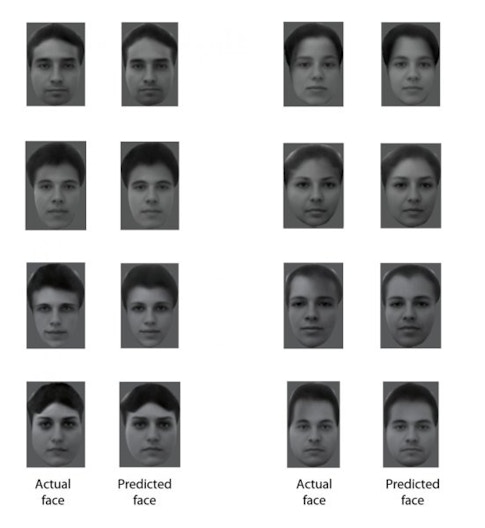

Tsao and postdoctoral researcher Steven Le Chang were able to decode these neurons so accurately that they could reconstruct the faces used in the experiment by harnessing the output of only about 200 neurons. “This was mind-blowing,” Tsao told Scientific American. “The values of each dial are so predictable that we can re-create the face that a monkey sees, by simply tracking the electrical activity of its face cells.”

Tsao, an investigator with the Simons Collaboration on the Global Brain, had previously identified a collection of face patches — clumps of cells that respond preferentially to faces — in the inferior temporal cortex. Scientists have debated for years exactly how these cells give us such exquisite precision when it comes to recognizing faces. Some evidence supports the ‘grandmother cell’ theory of face processing, which proposes that each face cell is dedicated to one or a few individuals.

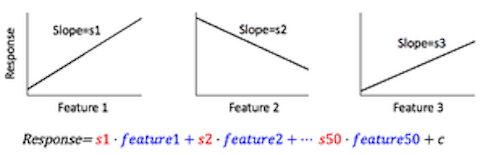

The new findings challenge that idea. Rather than each cell coding for a specific face, Tsao and Chang showed that individual cells represent a specific axis within a multidimensional space, dubbed ‘face space.’ According to Cell Press, “In the same way that red, blue, and green light combine in different ways to create every possible color on the spectrum, these axes can combine in different ways to create every possible face.”

The researchers first mapped out a 50-dimensional face space: 25 dimensions coded for spatial features, such as the space between the eyes, and another 25 coded for other factors, such as skin tone. Using neural activity recorded from individual face cells in macaque monkeys as they looked at images of faces, the researchers found that each neuron responds in a predictable, linear way to specific features (or dimensions) in the 50-dimensional space. An individual cell’s response to a face is simply the linear combination of its responses to each feature.
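To make "linear combination" concrete, here is a minimal sketch of such a cell in Python. It is an illustration only, not the researchers' model: the weights, the two faces, and the `cell_response` helper are all hypothetical, and the feature values are random numbers rather than measurements.

```python
import random

N_DIMS = 50  # size of the face space described in the article

def cell_response(weights, face, baseline=0.0):
    """Hypothetical linear cell: baseline plus a weighted sum of feature values."""
    return baseline + sum(w * f for w, f in zip(weights, face))

rng = random.Random(0)
weights = [rng.gauss(0, 1) for _ in range(N_DIMS)]  # made-up tuning of one cell
face_a = [rng.gauss(0, 1) for _ in range(N_DIMS)]   # made-up face, one value per dimension
face_b = [rng.gauss(0, 1) for _ in range(N_DIMS)]

# A face halfway between A and B in face space
blend = [0.5 * a + 0.5 * b for a, b in zip(face_a, face_b)]

response_a = cell_response(weights, face_a)
response_b = cell_response(weights, face_b)
response_blend = cell_response(weights, blend)
```

Because the cell is linear, its response to the halfway face is the average of its responses to the two original faces. That predictability is what makes the code readable from the outside.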

“We were stunned that, deep in the brain’s visual system, the neurons are actually doing simple linear algebra. Each cell is literally taking a 50-dimensional vector space — face space — and projecting it onto a one-dimensional subspace. It was a revelation to see that each cell indeed has a 49-dimensional null space; this completely overturns the long-standing idea that single face cells are coding specific facial identities. Instead, what we’ve found is that these cells are beautifully simple linear projection machines,” Tsao told Caltech.
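The null space Tsao mentions can be illustrated with a few lines of Python. This is a sketch under stated assumptions: the cell's `axis`, the starting `face`, and the helper functions are invented for the example, and the cell is assumed to be perfectly linear with no noise.

```python
import random

def dot(u, v):
    """Dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))

def remove_component(direction, axis):
    """Return the part of `direction` that is orthogonal to `axis`."""
    scale = dot(direction, axis) / dot(axis, axis)
    return [d - scale * a for d, a in zip(direction, axis)]

rng = random.Random(1)
axis = [rng.gauss(0, 1) for _ in range(50)]    # hypothetical preferred axis of one cell
face = [rng.gauss(0, 1) for _ in range(50)]    # hypothetical face in the 50-D space
change = [rng.gauss(0, 1) for _ in range(50)]  # an arbitrary change to that face

# Strip out the one direction the cell cares about; what remains lies in its null space
silent_change = remove_component(change, axis)
other_face = [f + c for f, c in zip(face, silent_change)]

response_before = dot(axis, face)
response_after = dot(axis, other_face)
```

The two faces differ in many features, yet the simulated cell fires identically to both. A cell tuned to one specific identity would not behave this way, which is the heart of the argument against grandmother cells in the face patches.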

Moreover, the finding “demolishes the vague intuition everyone tacitly shared about face cells — that they should be tuned to specific exemplars — even if broadly,” Tsao says.

Once researchers deciphered how these cells respond to faces, they developed a model that could synthesize activity from different cells to recreate a given face. Activity recorded from roughly 200 cells across two different face patches was sufficient to reconstruct faces. “People always say a picture is worth a thousand words,” Tsao told Cell Press. “But I like to say that a picture of a face is worth about 200 neurons.”
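The idea of reading a face back out of a population of linear cells can be sketched with ordinary least squares. This is not the study's decoding pipeline. It assumes each simulated cell's axis is already known, uses random numbers in place of recordings, and adds a made-up noise level.

```python
import numpy as np

rng = np.random.default_rng(0)
n_cells, n_dims = 200, 50  # cell count and face-space size taken from the article

# Hypothetical preferred axis for each simulated cell (one row per cell)
axes = rng.normal(size=(n_cells, n_dims))

# A made-up face and the noisy responses it would evoke in linear cells
face = rng.normal(size=n_dims)
responses = axes @ face + rng.normal(scale=0.1, size=n_cells)

# Recover the 50 feature values that best explain the 200 responses
decoded, *_ = np.linalg.lstsq(axes, responses, rcond=None)

# Relative reconstruction error (0 would mean a perfect reconstruction)
error = np.linalg.norm(decoded - face) / np.linalg.norm(face)
```

With more cells than dimensions, the 200 responses over-determine the 50 unknown feature values, so the estimate stays close to the original face even with noise in each cell.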

The findings could have implications beyond face processing, outlining a type of code that might be used to encode other complex objects. “The way the brain processes this kind of information doesn’t have to be a black box,” Chang told Cell Press. “Although there are many steps of computations between the image we see and the responses of face cells, the code of these face cells turned out to be quite simple once we found the proper axes. This work suggests that other objects could be encoded with similarly simple coordinate systems.”

While Tsao’s face-patch neurons don’t act like grandmother cells, such cells probably do exist in other parts of the brain. A headline-grabbing study published in 2005 showed that individual cells in a different part of the human brain, the medial temporal lobe, can respond selectively to specific people, such as Jennifer Aniston or Halle Berry. These cells likely respond to the concept of a person (such as their name or image) rather than simply their face.