2015 MPS Annual Meeting

Download the 2015 Annual Meeting booklet (PDF).

The Mathematics and Physical Sciences Annual Meeting gathered together Simons Investigators, Simons Fellows, Simons Society of Fellows and Math + X Chairs and Investigators to exchange ideas through lectures and informal discussions in a scientifically stimulating environment.

Talks

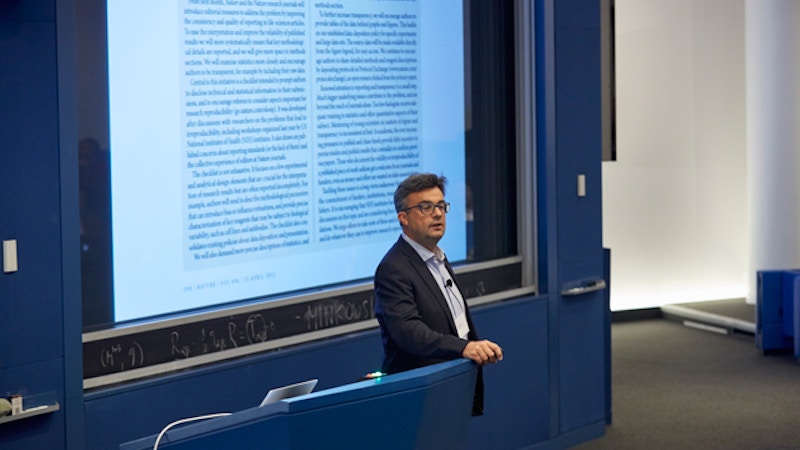

Emmanuel J. Candès

Stanford University

Around the Reproducibility of Scientific Research in the Big Data Era: What Statistics Can Offer

The big data era has created a new scientific paradigm: collect data first, ask questions later. When the universe of scientific hypotheses being examined simultaneously is not taken into account, inferences are likely to be false. As a consequence, follow-up studies are likely to fail to reproduce earlier reported findings or discoveries. This reproducibility failure bears a substantial cost, and this talk is about new statistical tools to address it. In the last two decades, statisticians have developed many techniques for addressing this look-everywhere effect, whose proper use would help alleviate the problems discussed above. This lecture will discuss some of these proposed solutions, including the Benjamini-Hochberg procedure for false discovery rate (FDR) control and the knockoff filter, a method that reliably selects which of the many potentially explanatory variables of interest (e.g., the presence or absence of a mutation) are truly associated with the response under study (e.g., the log fold increase in HIV drug resistance).
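To make the two selection rules concrete, here is a minimal sketch of their final selection steps, written for illustration rather than taken from the talk. `benjamini_hochberg` applies the standard step-up rule to a list of p-values. `knockoff_select` applies the knockoff+ threshold and assumes each variable already has a statistic `W[j]`, large and positive when the original variable looks more important than its knockoff copy; constructing the knockoffs and computing `W` is not shown. All function names here are hypothetical.

```python
from typing import Sequence


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.1) -> list[int]:
    """Indices of hypotheses rejected by the Benjamini-Hochberg step-up rule.

    Sort the m p-values, find the largest rank k with p_(k) <= k * q / m,
    and reject the k hypotheses with the smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:
            k = rank  # keep the largest qualifying rank (step-up)
    return sorted(order[:k])


def knockoff_plus_threshold(W: Sequence[float], q: float = 0.1) -> float:
    """Data-dependent threshold of the knockoff+ filter.

    For a null variable, W[j] is equally likely to be positive or negative,
    so the count of W's at or below -t estimates the false selections at t.
    """
    for t in sorted({abs(w) for w in W if w != 0}):
        false_estimate = 1 + sum(1 for w in W if w <= -t)
        n_selected = max(1, sum(1 for w in W if w >= t))
        if false_estimate / n_selected <= q:
            return t
    return float("inf")  # no threshold meets the target: select nothing


def knockoff_select(W: Sequence[float], q: float = 0.1) -> list[int]:
    """Indices of variables whose statistic clears the knockoff+ threshold."""
    t = knockoff_plus_threshold(W, q)
    return [j for j, w in enumerate(W) if w >= t]
```

For example, `benjamini_hochberg([0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216], q=0.05)` returns `[0, 1]`, and `knockoff_select([5, 4, 3.5, 3, 2.5, 2, -0.5, 0.3, -0.2, 0], q=0.2)` returns `[0, 1, 2, 3, 4, 5]`. In both cases the cutoff is chosen from the data rather than fixed in advance, which is what lets the procedures control the false discovery rate.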

Emmanuel Candès is a professor of mathematics, statistics and electrical engineering, and a member of the Institute for Computational and Mathematical Engineering at Stanford University. Prior to his appointment as a Simons Chair, Candès was the Ronald and Maxine Linde Professor of Applied and Computational Mathematics at the California Institute of Technology. His research interests are in computational harmonic analysis, statistics, information theory, signal processing and mathematical optimization, with applications to the imaging sciences, scientific computing and inverse problems. He received his Ph.D. in statistics from Stanford University in 1998.

Candès has received numerous awards throughout his career, most notably the 2006 Alan T. Waterman Award (the highest honor presented by the National Science Foundation), which recognizes the achievements of scientists who are no older than 35 or not more than seven years beyond their doctorate. Other honors include the 2005 James H. Wilkinson Prize in Numerical Analysis and Scientific Computing awarded by the Society for Industrial and Applied Mathematics (SIAM), the 2008 Information Theory Society Paper Award, the 2010 George Pólya Prize awarded by SIAM, the 2011 Collatz Prize awarded by the International Council for Industrial and Applied Mathematics (ICIAM), the 2012 Lagrange Prize in Continuous Optimization awarded jointly by the Mathematical Optimization Society (MOS) and SIAM, and the 2013 Dannie Heineman Prize presented by the Academy of Sciences at Göttingen. He has given over 50 plenary lectures at major international conferences, not only in mathematics and statistics but also in several other areas, including biomedical imaging and solid-state physics.

In 2014, Candès was elected to the National Academy of Sciences and to the American Academy of Arts and Sciences. That summer, he gave an Invited Plenary Lecture at the International Congress of Mathematicians in Seoul. Additionally, W. E. Moerner, one of his Stanford Math+X collaborators, shared the 2014 Nobel Prize in Chemistry.

Michael Desai

Harvard University

Evolutionary Dynamics When Natural Selection Is Widespread

The basic rules of evolution are straightforward: mutations generate variation, while genetic drift, recombination and natural selection change the frequencies of the variants. Yet it is often surprisingly difficult to predict what is possible in evolution, over what timescales and under which conditions. A key problem is that in many populations, natural selection faces too much at once. Many mutations are present simultaneously, and because recombination is limited, selection cannot act on each separately. Rather, mutations are constantly occurring in a variety of combinations linked together on physical chromosomes, and selection can act only on these combinations as a whole. This dramatically reduces the efficiency of natural selection, creates complex correlations across the genome and makes it very difficult to predict how evolution will proceed. Desai will describe recent work aimed at understanding evolutionary dynamics when selection is widespread, using a combination of mathematical models and experimental evolution in laboratory populations of budding yeast.

Michael Desai combines theoretical and experimental work to bring quantitative methodology to the field of evolutionary dynamics; he and his group are particularly known for their contributions in the area of statistical genetics.

Sharon Glotzer

University of Michigan

Entropy, Information & Alchemy

For the early alchemists, the transmutation of elements held the key to new materials. Today, colloidal “elements” — that is, nanometer- to micron-size particles — that can be decorated and shaped in an infinity of ways are being used to thermodynamically assemble ordered structures that would astonish the ancient alchemists in their geometric complexity and diversity. Using computer simulation methods built upon the theoretical framework of equilibrium statistical mechanics, we model and simulate these colloidal elements and the interactions among them to predict stable and metastable phases, including crystals, liquid crystals, quasicrystals and even crystals with ultra-large unit cells. Many of these structures are, remarkably, achievable via entropy maximization in the absence of other forces, using only shape. By studying families of colloidal elements and their packings and assemblies, we deduce key elemental requirements for certain classes of structures. Recently, we have shown how extended thermodynamic ensembles may be derived and implemented in computer simulations to perform “digital alchemy,” whereby fast algorithms quickly discover optimal colloidal elements for given target structures. Through digital alchemy, we learn which elemental attributes are most important for thermodynamic stability and assembly propensity, and how information is encoded locally to produce order globally.

Sharon Glotzer is a leader in the use of computer simulations to understand how to manipulate matter at the nano- and meso-scales. Her work in the late 1990s demonstrating the nature and importance of spatially heterogeneous dynamics is regarded as a breakthrough. Her ambitious program of computational studies has revealed much about the organizing principles controlling the creation of predetermined structures from nanoscale building blocks, while her development of a conceptual framework for classifying particle shape and interaction anisotropy (patchiness) and their relation to the ultimate structures the particles form has had a major impact on the new field of “self-assembly”. Glotzer recently showed that hard tetrahedra self-assemble into a quasicrystal exhibiting a remarkable twelve-fold symmetry with an unexpectedly rich structure of logs formed by stacks of twelve-member rings capped by pentagonal dipyramids.

Patrick Hayden

Stanford University

It from Qubit: First Steps

When Shannon formulated his groundbreaking theory of information in 1948, he did not know what to call its central quantity, a measure of uncertainty. It was von Neumann who recognized Shannon’s formula from statistical physics and suggested the name entropy. This was but the first in a series of remarkable connections between physics and information theory. Later, tantalizing hints from the study of quantum fields and gravity, such as the Bekenstein-Hawking formula for the entropy of a black hole, inspired Wheeler’s famous 1990 exhortation to derive “it from bit,” a three-syllable manifesto asserting that, to properly unify the geometry of general relativity with the indeterminacy of quantum mechanics, it would be necessary to inject fundamentally new ideas from information theory. Wheeler’s vision was sound, but it came twenty-five years early. Only now is it coming to fruition, with the twist that classical bits have given way to the qubits of quantum information theory.
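The connection von Neumann noticed can be seen directly in the formulas: Shannon's entropy H = -Σ p_i log p_i has the same form as the Gibbs entropy S = -k_B Σ p_i ln p_i of statistical mechanics, differing only in the constant k_B and the base of the logarithm. The short sketch below, with hypothetical function names, computes both for a discrete distribution; `BOLTZMANN_K` is the exact SI value of k_B.

```python
import math
from typing import Sequence

BOLTZMANN_K = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def shannon_entropy(probs: Sequence[float], base: float = 2.0) -> float:
    """Shannon's H = -sum_i p_i log p_i (bits for base 2); p_i = 0 terms contribute 0."""
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probs must be a probability distribution")
    return -sum(p * math.log(p, base) for p in probs if p > 0)


def gibbs_entropy(probs: Sequence[float]) -> float:
    """Statistical-physics (Gibbs) entropy S = -k_B sum_i p_i ln p_i, in J/K."""
    return BOLTZMANN_K * shannon_entropy(probs, base=math.e)
```

A fair coin carries one bit, so `shannon_entropy([0.5, 0.5])` gives 1 bit and `gibbs_entropy([0.5, 0.5])` gives k_B ln 2; a uniform choice among eight outcomes carries three bits.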

This talk will provide a tour of some of the recent developments at the intersection of quantum information and fundamental physics that are the source of this renewed excitement.

Patrick Hayden’s work on the requirements for secure communication through quantum channels transformed the field of quantum information, establishing a general structure and a set of powerful results that subsumed most of the previous work in the field as special cases. More recently, he has used quantum information theory concepts to obtain new results related to the quantum physics of black holes.

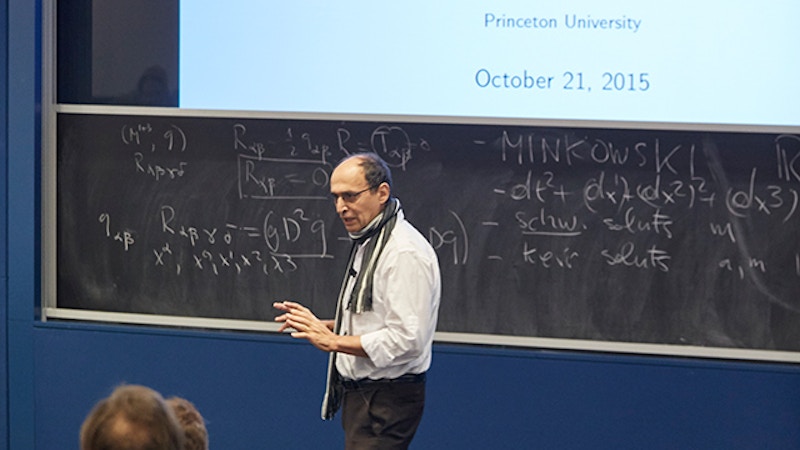

Sergiu Klainerman

Princeton University

Are Black Holes Real?

Black holes are precise mathematical solutions of the Einstein field equations of general relativity. Some of the most exciting astrophysical objects in the universe have been identified as corresponding to these mathematical black holes, but since no signals can escape their extreme gravitational pull, can one be sure that the right identification has been made?

Klainerman will discuss three fundamental mathematical problems concerning black holes, intimately tied to the issue of their physical reality: rigidity, stability and collapse.

Sergiu Klainerman is a PDE analyst with a strong interest in general relativity. His current research deals with the mathematical theory of black holes, more precisely their rigidity and stability. Klainerman is also interested in the dynamical formation of trapped surfaces and singularities.

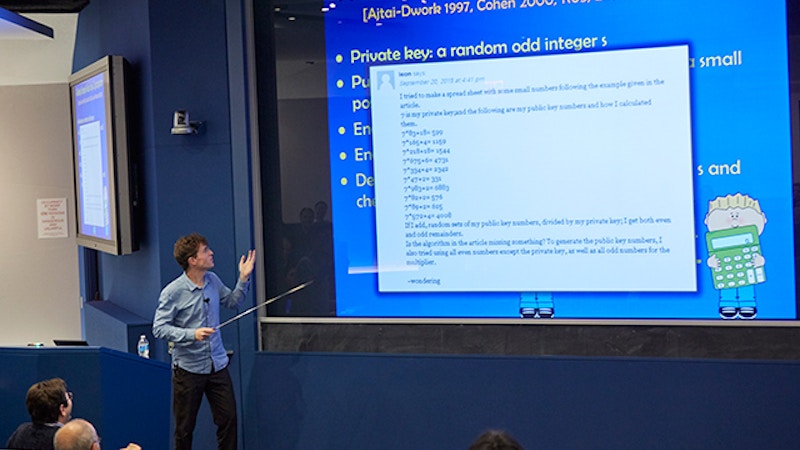

Oded Regev

New York University

Lattice-Based Cryptography

Most of the cryptographic protocols used in everyday life are based on number-theoretic problems, such as integer factoring. Regev will give an introduction to lattice-based cryptography, a relatively recent form of cryptography that offers many advantages over traditional number-theoretic cryptography, including conjectured security against quantum computers. Lattice-based cryptography is also remarkably versatile, with dozens of applications, most notably the recent breakthrough work on fully homomorphic encryption by Gentry and others.

Oded Regev is a professor in the Courant Institute of Mathematical Sciences of New York University. Prior to joining NYU, he was affiliated with Tel Aviv University and with the École Normale Supérieure, Paris, under the French National Centre for Scientific Research (CNRS). He received his Ph.D. in computer science from Tel Aviv University in 2001. He is a recipient of the Wolf Foundation’s Krill Prize for Excellence in Scientific Research (2005) and of best paper awards at STOC 2003 and Eurocrypt 2006. He was awarded a European Research Council (ERC) Starting Grant in 2008.

His main research areas include theoretical computer science, cryptography, quantum computation and complexity theory. A main focus of his research is lattice-based cryptography, where he introduced several key concepts, including the ‘learning with errors’ (LWE) problem and the use of Gaussian measures.
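To give a feel for how the learning with errors problem supports encryption, here is a toy sketch loosely following the structure of Regev's LWE-based public-key scheme for a single bit: the public key is a set of noisy inner products b = A·s + e (mod q), and a ciphertext sums a random subset of them, adding ⌊q/2⌋ to encode a 1. The parameters `N`, `M`, `Q` and the uniform error set {-1, 0, 1} are assumptions chosen for illustration (the scheme uses Gaussian-like errors and much larger parameters); this is not secure and not a reference implementation.

```python
import random

# Toy parameters, assumed for illustration only; far too small to be secure.
N, M, Q = 8, 20, 97


def keygen(rng: random.Random):
    """Secret s in Z_q^N; public key (A, b = A s + e mod q) with small errors e."""
    s = [rng.randrange(Q) for _ in range(N)]
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    e = [rng.choice((-1, 0, 1)) for _ in range(M)]  # toy error distribution
    b = [(sum(a * x for a, x in zip(row, s)) + err) % Q for row, err in zip(A, e)]
    return s, (A, b)


def encrypt(public_key, bit: int, rng: random.Random):
    """Sum a random subset of the public samples; add floor(q/2) to encode a 1."""
    A, b = public_key
    subset = [i for i in range(M) if rng.random() < 0.5]
    u = [sum(A[i][j] for i in subset) % Q for j in range(N)]
    v = (sum(b[i] for i in subset) + bit * (Q // 2)) % Q
    return u, v


def decrypt(s, ciphertext) -> int:
    """v - <u, s> = (sum of subset errors) + bit * floor(q/2) mod q.

    The accumulated error is at most M = 20 < Q/4 in absolute value,
    so rounding to the nearer of 0 and q/2 recovers the bit.
    """
    u, v = ciphertext
    d = (v - sum(a * x for a, x in zip(u, s))) % Q
    return 1 if Q / 4 < d < 3 * Q / 4 else 0
```

Without the secret `s`, recovering the bit amounts to distinguishing the noisy pairs `(A, b)` from uniformly random ones, which is the LWE problem; with `s`, the noise simply accumulates and is rounded away.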

Iain Stewart

Massachusetts Institute of Technology

Factorization: Collider Physics from Universal Functions

High-energy collisions of protons and electrons provide a crucial probe of the nature of physics at very short distance scales. Past successes include the discovery of new particles, confirmation of detailed properties of the strong, weak, and electromagnetic forces, and measurement of the few key fundamental parameters of the prevailing “standard model” of particle and nuclear physics. Current colliders such as the Large Hadron Collider (LHC) are searching for new types of matter, like a dark matter particle, and for signs of new paradigms, like supersymmetry.

In this talk, Professor Stewart will explain the mathematical underpinnings needed to theoretically predict the outcome of high-energy collisions, which rest on the concept of factorization in quantum field theory. Factorization predicts that a complicated collision can be described by combining simpler, universal functions: the long-distance processes that take place before and after the collision, and the short-distance processes that take place at the collision, are each described by separate functions. The factorization framework can be derived in several special cases, but it underlies all theoretical predictions. When applicable, it enables high-precision theoretical calculations that can be compared with experimental data, for example measurements of the properties of jets (sprays of particles) influenced by the strong force. It is also important for interpreting experimental measurements, such as those of the properties of the recently discovered Higgs boson. Besides explaining these concepts, Professor Stewart will describe a new field theory formalism he has recently developed, whose goal is to provide a complete framework for describing violations of factorization, thus enabling “proofs” of factorization in more cases and providing theoretical tools that can explore the nature of collisions even when factorization is violated.
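As a schematic illustration of what "combining simpler universal functions" means, the textbook collinear factorization formula for producing a hadron h in a proton-proton collision is shown below; it is a standard example, not the new formalism of the talk. The parton distribution functions f_{a/p} describe the long-distance physics before the collision, the partonic cross section dσ̂ describes the short-distance collision and is computable in perturbation theory, and the fragmentation functions D_{h/c} describe the long-distance physics afterwards. The distributions and fragmentation functions are universal (the same in every process), μ is the factorization scale, and the formula holds up to corrections suppressed by powers of Λ_QCD/Q, with Q the hard scale; the kinematic arguments are written schematically.

```latex
d\sigma_{pp \to h X}
  = \sum_{a,b,c} \int dx_a \, dx_b \, dz \;
    f_{a/p}(x_a, \mu) \, f_{b/p}(x_b, \mu) \;
    d\hat{\sigma}_{ab \to c X}(x_a, x_b, z, \mu) \;
    D_{h/c}(z, \mu)
```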

Iain Stewart works in the physics of elementary particles, investigating fundamental questions in quantum chromodynamics, i.e., the interactions of quarks and gluons via the strong force. He is particularly known for his role in inventing soft collinear effective field theory, a theoretical tool for understanding the particle jets produced by high-energy collisions in accelerators such as the LHC. He has established factorization theorems that enable the clear interpretation and physical understanding of the collision products. Methods he has developed have been used in the search for the Higgs boson, to gain new insights into effects of CP violation in B-meson production and to test for beyond-standard-model physics.

Salil P. Vadhan

Harvard University

The Border between Possible and Impossible in Data Privacy

A central paradigm in theoretical computer science is to reason about the space of all possible algorithms for any given problem. That is, we seek to identify an algorithm with the “best” possible performance, and then prove that no algorithm can perform better, no matter how cleverly it is designed. In this talk, I will illustrate how this paradigm has played a central role in the development of differential privacy, a mathematical framework for enabling the statistical analysis of privacy-sensitive datasets while ensuring that information specific to individual data subjects will not be leaked. In particular, we are using it to delineate the border between what is possible and what is impossible in differential privacy, and the effort has uncovered intriguing connections with several other topics in theoretical computer science and mathematics.
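As background on the "possible" side of that border, the sketch below shows the standard Laplace mechanism for a counting query, a basic building block of differential privacy; it is not the content of the talk, and the function names are hypothetical. Because adding or removing one individual's record changes a count by at most 1, adding Laplace noise with scale 1/ε to the true count yields ε-differential privacy. The noise is drawn as the difference of two independent exponential variables, which has a Laplace distribution.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """epsilon-differentially private count of records satisfying `predicate`.

    One individual's record changes the true count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon suffices for epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Smaller ε means stronger privacy but noisier answers: the expected absolute error is 1/ε. Lower-bound results of the kind the talk describes show when no mechanism, however clever, can do substantially better.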

Salil Vadhan has produced a series of original and influential papers on computational complexity and cryptography. He uses complexity-theoretic methods and perspectives to delineate the border between the possible and impossible in cryptography and data privacy. His work also illuminates the relation between computational and information-theoretic notions of randomness, thereby enriching the theory of pseudorandomness and its applications. All of these themes are present in Vadhan’s recent papers on differential privacy and on computational analogues of entropy.

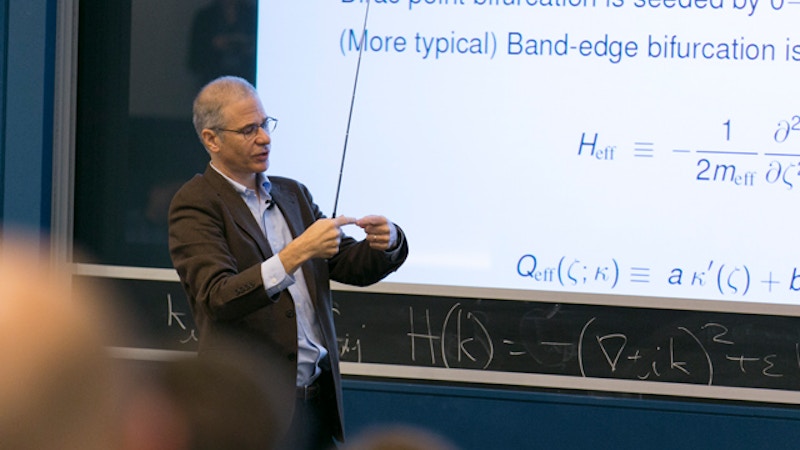

Michael Weinstein

Columbia University

Energy on the Edge: A Mathematical View

In many applications, e.g., photonic and quantum systems, one is interested in controlled localization of wave energy.

We first review the mathematics of periodic media and localization. Edge states are a type of localization along a line-defect, the interface between different media. We then specialize to the case of honeycomb structures (such as graphene and its photonic analogues) and discuss their novel properties. In particular, we examine their potential to form topologically protected edge states, which persist and are stable against strong local distortions of the edge, and are therefore potential vehicles for robust energy transfer in the presence of defects and random imperfections.
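For readers unfamiliar with why honeycomb structures are special, the sketch below uses the standard nearest-neighbour tight-binding model of a honeycomb lattice. This is a discrete model assumed here for illustration, not the continuum Schrödinger models of the talk. Its two energy bands E(k) = ±t|f(k)| touch at conical points (Dirac points) at the corners of the Brillouin zone, such as `K_POINT` below, a feature behind many of the distinctive properties of graphene and its photonic analogues. The lattice vectors, units and hopping `t` are assumptions of the sketch.

```python
import cmath
import math

# Lattice vectors of a honeycomb lattice with nearest-neighbour distance 1 (assumed units).
A1 = (1.5, math.sqrt(3) / 2)
A2 = (1.5, -math.sqrt(3) / 2)

# A corner ("K point") of the hexagonal Brillouin zone for this choice of A1, A2.
K_POINT = (2 * math.pi / 3, 2 * math.pi / (3 * math.sqrt(3)))


def structure_factor(kx: float, ky: float) -> complex:
    """f(k) = 1 + exp(i k.A1) + exp(i k.A2): hopping between the two sublattices."""
    phase1 = kx * A1[0] + ky * A1[1]
    phase2 = kx * A2[0] + ky * A2[1]
    return 1 + cmath.exp(1j * phase1) + cmath.exp(1j * phase2)


def band_energies(kx: float, ky: float, t: float = 1.0) -> tuple[float, float]:
    """Lower and upper tight-binding bands E(k) = -t|f(k)| and +t|f(k)|."""
    magnitude = abs(structure_factor(kx, ky))
    return -t * magnitude, t * magnitude
```

At the zone centre the bands sit at ±3t, while at `K_POINT` the three phases cancel and the bands meet at zero energy (up to rounding).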

Finally, we discuss rigorous results and conjectures for a family of continuum partial differential equation (Schrödinger) models admitting edge states: some that are topologically protected, some that are not, and possibly some that decay but are very long-lived.

Michael Weinstein’s work bridges the areas of fundamental and applied mathematics, physics and engineering. He is known for his elegant and influential mathematical analysis of wave phenomena in diverse and important physical problems. His and his colleagues’ work on singularity formation, stability and nonlinear scattering has been central to the understanding of the dynamics of coherent structures of nonlinear dispersive wave equations arising in nonlinear optics, macroscopic quantum systems and fluid dynamics. This led to work on resonances and radiation in Hamiltonian partial differential equations, with applications to energy flow in photonic and quantum systems. Recently, he has explored wave phenomena in novel structures such as topological insulators and metamaterials.